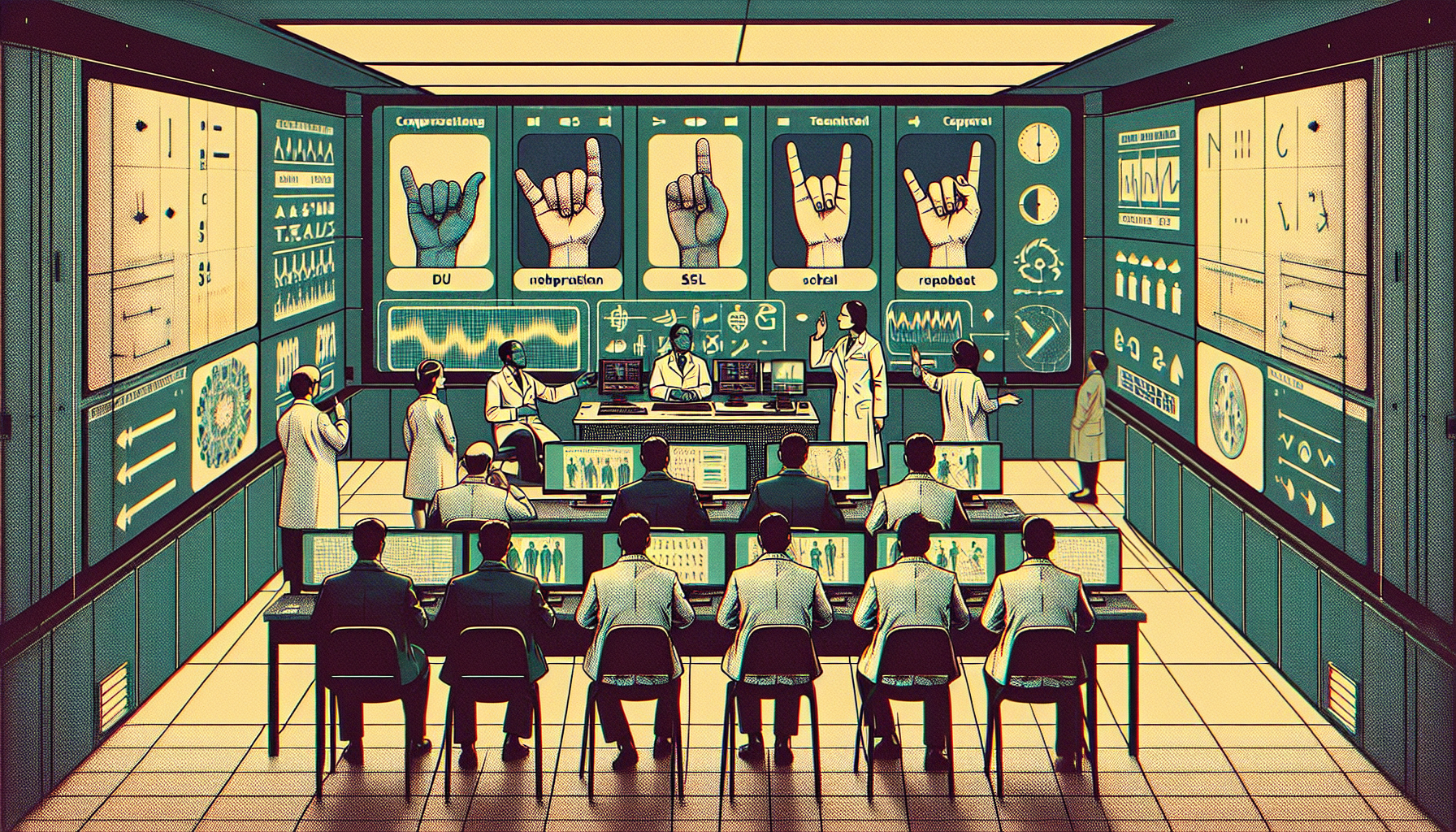

Researchers at Florida Atlantic University’s College of Engineering and Computer Science have developed a system that interprets American Sign Language (ASL) alphabet gestures in real time. Built on computer vision and artificial intelligence, the system is a significant step forward for communication accessibility.

The Complexity of Sign Language

Sign language is a vital means of communication for deaf and hard-of-hearing people. Unlike spoken languages, sign languages convey meaning through a combination of hand movements, facial expressions, and body language. Each sign language has its own grammar, syntax, and vocabulary, and ASL is no exception. Because of this diversity, recognition systems generally have to be tailored to the specific sign language they are meant to interpret.

The Research Breakthrough

The study, published in the Elsevier journal Franklin Open, describes a deep learning approach to recognizing ASL alphabet gestures accurately. The researchers built a custom dataset of 29,820 static images of ASL gestures. Each hand in these images was annotated with 21 landmarks using MediaPipe, providing detailed spatial information that helped improve the precision of the deep learning model, YOLOv8.
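The article does not say exactly how the MediaPipe landmarks were combined with YOLOv8. As one illustration, the sketch below assumes the 21 landmarks of a hand are used to derive a bounding-box label in the plain-text format YOLO detectors train on (`class x_center y_center width height`, all normalized to the image size). The helper name `landmarks_to_yolo_label` and the default 10% padding are hypothetical choices, not details from the study.

```python
from typing import List, Tuple

# MediaPipe Hands reports 21 landmarks per hand; x and y are normalized to [0, 1]
# by image width and height (index 0 is the wrist, 4 the thumb tip, 8 the index tip).
NUM_HAND_LANDMARKS = 21

Landmark = Tuple[float, float]


def landmarks_to_yolo_label(
    landmarks: List[Landmark], class_id: int, pad: float = 0.1
) -> str:
    """Turn one hand's normalized landmarks into a YOLO detection label line.

    Hypothetical helper: the box is the tight extent of the landmarks, enlarged
    by `pad` (a fraction of its width/height) on each side and clipped to the image.
    """
    if len(landmarks) != NUM_HAND_LANDMARKS:
        raise ValueError(f"expected {NUM_HAND_LANDMARKS} landmarks, got {len(landmarks)}")
    xs = [x for x, _ in landmarks]
    ys = [y for _, y in landmarks]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    # Pad so fingertips and knuckles are not cut off at the box edge.
    dx = (x_max - x_min) * pad
    dy = (y_max - y_min) * pad
    x_min, x_max = max(0.0, x_min - dx), min(1.0, x_max + dx)
    y_min, y_max = max(0.0, y_min - dy), min(1.0, y_max + dy)
    # YOLO text labels: class x_center y_center width height (all normalized).
    xc = (x_min + x_max) / 2
    yc = (y_min + y_max) / 2
    w = x_max - x_min
    h = y_max - y_min
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"
```

For example, a hand whose landmarks span x from 0.4 to 0.6 and y from 0.3 to 0.7 (with the remaining landmarks inside that range) yields the label `0 0.500000 0.500000 0.200000 0.400000` for class 0 with `pad=0.0`.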

Methodology and Results

Combining MediaPipe hand tracking with a carefully trained YOLOv8 model produced a highly accurate system. The model achieved 98% accuracy, 98% recall, and an F1 score (the harmonic mean of precision and recall) of 99%. It also reached a mean Average Precision (mAP) of 98% and an mAP50-95 score of 93%. The mAP50-95 metric averages precision over intersection-over-union (IoU) thresholds from 0.50 to 0.95, so it is a stricter measure of how precisely the model localizes each gesture.
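To make these metrics concrete, the sketch below computes precision, recall, and F1 from detection counts, plus IoU, the box-overlap measure behind mAP. These are illustrative helpers, not the study's evaluation code; a full AP calculation also ranks detections by confidence and integrates the precision-recall curve, which is omitted here.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1 (their harmonic mean) from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# mAP50-95 averages AP over ten IoU thresholds (0.50, 0.55, ..., 0.95);
# mAP50 uses only the first. Built from integers to avoid float drift.
IOU_THRESHOLDS = [t / 100 for t in range(50, 100, 5)]


def thresholds_passed(
    pred: Box, truth: Box, thresholds: Sequence[float] = IOU_THRESHOLDS
) -> int:
    """How many IoU thresholds would count this prediction as a true positive."""
    overlap = iou(pred, truth)
    return sum(overlap >= t for t in thresholds)
```

A predicted box covering 77% of a ground-truth box (IoU 0.77) counts as correct at the 0.50 threshold but at only 6 of the 10 thresholds in mAP50-95, which is why that score is harder to push toward 100%.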

Technological Innovations

The model’s performance rests on three technical choices:

- Landmark Annotations: Annotating each image with MediaPipe hand landmarks helped the model detect subtle variations in hand pose.

- Deep Learning: A fine-tuned YOLOv8 model with tailored hyperparameters delivered accurate real-time gesture recognition.

- Transfer Learning: Combining transfer learning with careful fine-tuning was key to the model’s high accuracy and reliability.
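The transfer-learning and fine-tuning steps listed above can be sketched with the Ultralytics Python package, assuming that is the YOLOv8 implementation in use: training starts from COCO-pretrained weights, and early layers can be frozen while the rest adapts to the ASL classes. The class list, directory layout, model size, and every hyperparameter value below are placeholders, not the settings reported in the study.

```python
from typing import Any, Dict, List

# Hypothetical label set: the 26 letters of the ASL alphabet. The study's exact
# class list is not given in this article.
CLASS_NAMES: List[str] = [chr(c) for c in range(ord("A"), ord("Z") + 1)]


def dataset_yaml(root: str) -> str:
    """Render a dataset description in the YAML layout Ultralytics expects.

    The directory names under `root` are placeholders.
    """
    lines = [f"path: {root}", "train: images/train", "val: images/val", "names:"]
    lines += [f"  {i}: {name}" for i, name in enumerate(CLASS_NAMES)]
    return "\n".join(lines) + "\n"


def training_args(data_yaml_path: str) -> Dict[str, Any]:
    """Illustrative fine-tuning settings; none of these values come from the study."""
    return {
        "data": data_yaml_path,
        "epochs": 100,
        "imgsz": 640,
        "batch": 16,
        "lr0": 0.01,   # initial learning rate
        "freeze": 10,  # keep the first 10 layers (roughly the backbone) fixed
    }


def fine_tune(data_yaml_path: str) -> None:
    """Start from COCO-pretrained YOLOv8 weights and fine-tune on the ASL data."""
    from ultralytics import YOLO  # lazy import: the helpers above work without it

    model = YOLO("yolov8n.pt")  # nano variant chosen arbitrarily for the sketch
    model.train(**training_args(data_yaml_path))
```

To run it, save the output of `dataset_yaml("datasets/asl")` to a file such as `asl.yaml` and call `fine_tune("asl.yaml")`. Freezing the early layers preserves general visual features learned on COCO, so a comparatively small gesture dataset only has to tune the later layers, which is the usual motivation for transfer learning.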

Practical Applications and Future Directions

The system could benefit people who are deaf or hard of hearing by making human-computer interaction more inclusive: software that reads ASL gestures accurately in real time makes technology more accessible to people who sign. Future work will expand the dataset to cover a broader range of hand shapes and gestures and refine the model so it distinguishes visually similar gestures more reliably. The team also plans to optimize the model for edge devices so that it keeps its real-time performance in resource-constrained environments.
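The article does not say how the model will be optimized for edge devices. One common technique is post-training quantization, which stores weights as 8-bit integers instead of 32-bit floats and so cuts weight storage by roughly a factor of four. The sketch below shows symmetric per-tensor int8 quantization on a plain list of weights; the function names are illustrative, and production toolchains apply the same idea layer by layer, usually with calibration data for activations.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ≈ scale * q, q in [-127, 127].

    Illustrative only; the study does not specify its edge-optimization method.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale for the whole tensor, chosen so the largest weight maps to ±127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 codes."""
    return [scale * v for v in q]
```

Each reconstructed weight differs from the original by at most half a quantization step (`scale / 2`), which is why well-calibrated int8 models usually lose little accuracy while running faster on modest hardware.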

Broader Context and Technological Advancements

The work is part of broader progress in sign language interpretation. Other studies using different deep learning architectures, such as SqueezeNet, have also reported promising results in recognizing ASL gestures from ordinary RGB images on mobile devices.
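Part of what makes SqueezeNet suitable for mobile devices is its Fire module, which squeezes the channel count with 1x1 convolutions before applying the more expensive 3x3 filters. The parameter arithmetic below uses the channel sizes of the first Fire module (fire2) in the original SqueezeNet design; the helper names are illustrative and biases are ignored.

```python
def conv_params(in_ch: int, out_ch: int, k: int) -> int:
    """Weights in a k x k convolution layer (biases ignored)."""
    return in_ch * out_ch * k * k


def fire_params(in_ch: int, squeeze: int, expand1x1: int, expand3x3: int) -> int:
    """Weights in a SqueezeNet Fire module: a 1x1 squeeze layer feeding parallel
    1x1 and 3x3 expand layers whose outputs are concatenated."""
    return (
        conv_params(in_ch, squeeze, 1)
        + conv_params(squeeze, expand1x1, 1)
        + conv_params(squeeze, expand3x3, 3)
    )


# fire2: 96 input channels, squeeze to 16, expand to 64 (1x1) + 64 (3x3) = 128 outputs.
fire2 = fire_params(96, 16, 64, 64)
plain = conv_params(96, 128, 3)  # a plain 3x3 convolution with the same widths
```

Here `fire2` evaluates to 11,776 weights, while the plain 3x3 convolution needs 110,592, roughly 9.4 times as many for the same input and output widths.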

Advances in natural language processing and AI have also driven progress in speech-to-sign-language translation and real-time sign language transcription. Systems such as SLAIT, a real-time ASL translator, use AI to transcribe ASL into text and to support video communication, narrowing the communication gap between deaf and hearing communities.

Conclusion

The Florida Atlantic University study marks a significant milestone in assistive technology. By combining object detection with hand landmark tracking, it improves communication accessibility and lays the groundwork for further advances in sign language interpretation, a step toward a more inclusive society.
