Artificial intelligence is increasingly woven into the fabric of our daily lives. From recommending the next movie to watch to helping diagnose medical conditions, AI systems are becoming trusted partners. But this growing reliance also raises a knotty ethical question: should these digital systems strictly follow rules set by humans, or should they develop some form of conscience of their own?

Compliance: The Rule-Following Robots

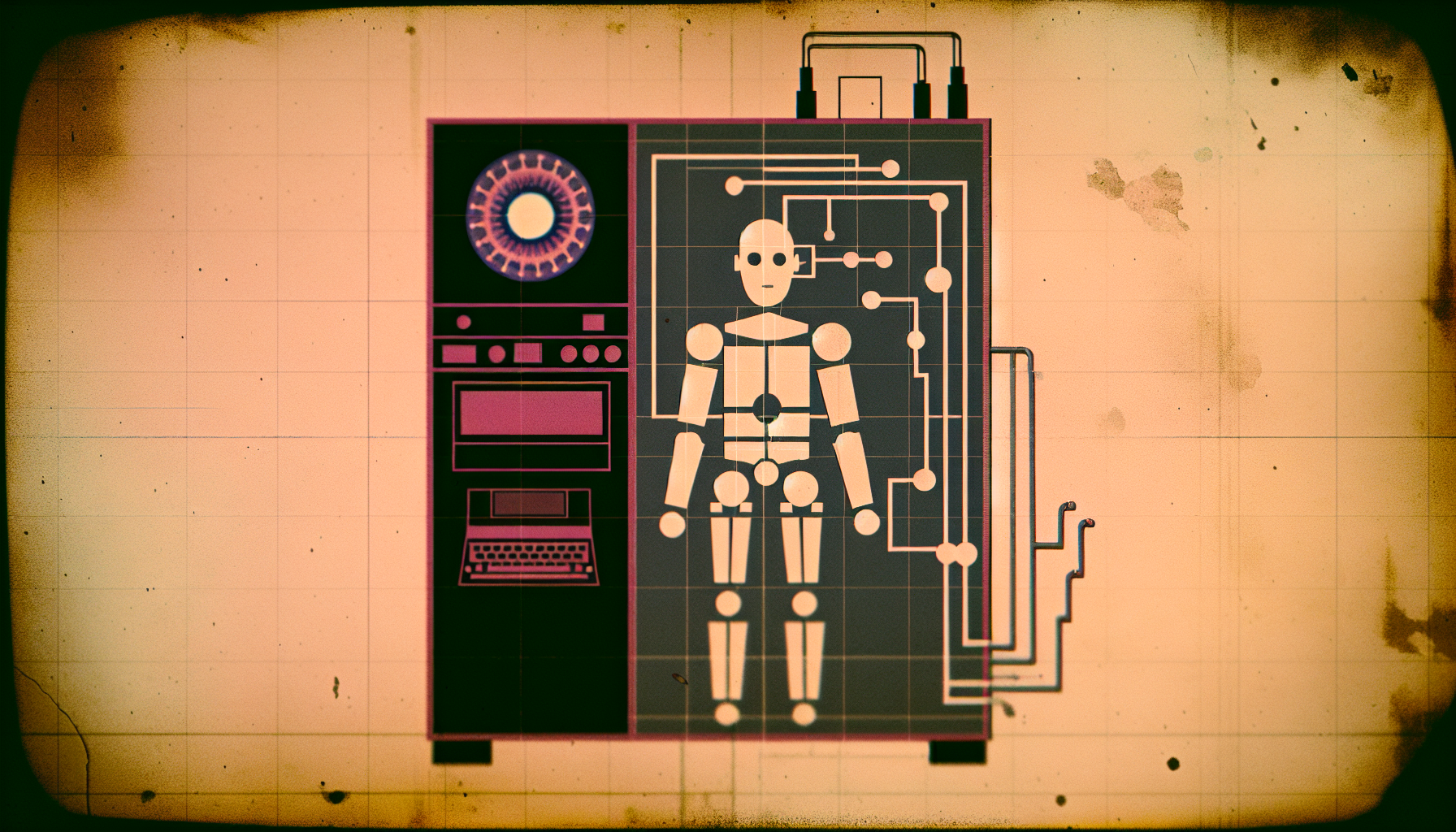

Compliance might seem like the safer route. Imagine an AI as a diligent bureaucrat who meticulously follows the laws and guidelines crafted by its human creators. It does what it’s told, no more, no less. This adherence to programmed rules offers predictability and accountability, which is comforting; after all, we don’t want our AI to suddenly decide that it no longer respects the speed limit.

However, a system that sticks rigidly to the rules without considering the nuances of real-life situations has an inherent problem. Picture this: an autonomous car is obeying traffic laws to the letter when a child suddenly runs into the street. The car must choose between hitting the child and swerving, potentially causing a different accident. What should it do? The rulebook offers no clear answer, which shows that real-life decisions often require a form of ethical judgment that strict compliance with rules can’t supply.

Conscience: The Ethical Robots?

Now, let’s take a look at the other side of the coin: a form of AI designed with a semblance of conscience. This kind of system would weigh options, consider the greater good, and make decisions based on ethical principles, much as a human would. Sounds ideal, right? But before you get too excited, let’s dive a bit deeper.

One option is to equip AI with an explicit set of ethical guidelines, something akin to Isaac Asimov’s famous “Three Laws of Robotics,” the fictional rules from his robot stories. However, even these are open to interpretation and conflict: Asimov ranked his laws in strict priority, yet many of his stories turn on situations where that ranking produces ambiguous or troubling results. What happens when two ethical principles clash? How does the AI decide which one takes precedence? The human conscience is the product of a long evolutionary history, refined by cultural, social, and personal experience. Trying to encode such a complex and dynamic system into AI is like trying to fit an ocean into a teacup.

Additionally, who gets to decide what an AI’s ethical guidelines should be? Are we comfortable with tech companies, governments, or even individual programmers halfway around the world setting these standards? Let’s not forget that each of them has its own biases and interests.

Finding a Middle Ground

Rather than treating compliance and conscience as mutually exclusive paths, we might do best to integrate the two. We can aim for a system in which AI starts with a basic rule-following framework and adds ethical reasoning that takes context into account. Think of it as a combination of a rulebook and a moral compass.

One possible solution is a layered approach. At the base layer, the AI operates under clear, predefined rules that ensure basic safety and functionality; these act as hard limits that higher-level reasoning cannot override. At a higher layer, more sophisticated algorithms evaluate the ethical dimensions of whatever options the rules still permit, weighing likely outcomes to reach more morally informed decisions. This multi-tiered strategy preserves predictability and safety while adding adaptability and ethical reasoning.
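The layered idea can be made concrete with a small sketch. It assumes, purely for illustration, that each candidate action comes with numeric estimates of harm and benefit, that the first layer's rules act as hard filters, and that the second layer ranks whatever survives by a weighted score. The names `Action`, `layered_decide`, and `harm_weight`, the rules, and all the numbers are hypothetical; a real system would face the much harder problem of producing trustworthy estimates in the first place.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Action:
    name: str
    expected_harm: float     # hypothetical estimate: 0.0 (none) to 1.0 (severe)
    expected_benefit: float  # hypothetical estimate: 0.0 (none) to 1.0 (large)


# A hard rule returns True if the action is allowed.
Rule = Callable[[Action], bool]


def layered_decide(actions: List[Action], rules: List[Rule],
                   harm_weight: float = 2.0) -> Optional[Action]:
    """Layer 1 filters out rule-violating actions; layer 2 ranks the rest."""
    # Layer 1: predefined rules are non-negotiable filters, applied first.
    permitted = [a for a in actions if all(rule(a) for rule in rules)]
    if not permitted:
        return None  # no action passes the rules: defer to a human
    # Layer 2: weigh outcomes among permitted actions, counting harm more heavily.
    return max(permitted,
               key=lambda a: a.expected_benefit - harm_weight * a.expected_harm)


if __name__ == "__main__":
    rules: List[Rule] = [
        lambda a: a.name != "run_red_light",  # hypothetical traffic rule
        lambda a: a.expected_harm < 0.8,      # hypothetical safety ceiling
    ]
    options = [
        Action("proceed", expected_harm=0.1, expected_benefit=0.7),
        Action("reroute", expected_harm=0.05, expected_benefit=0.5),
        Action("run_red_light", expected_harm=0.2, expected_benefit=0.95),
    ]
    print(layered_decide(options, rules).name)  # → proceed
    print(layered_decide(options, []).name)     # → run_red_light
```

Without the traffic rule, the higher-benefit red-light option wins on score alone; with it, the first layer removes that option before any weighing happens, which is the predictability the base layer is meant to provide. Returning `None` when nothing passes the rules is one simple way to hand the decision back to a human.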

Transparency and Accountability

Whatever path we take, transparency and accountability are critical. Users should have some understanding of the algorithms guiding an AI’s decisions, and there should be mechanisms in place to hold creators accountable for the actions of their digital progeny. Just as with human decisions, AI decisions must be open to audit and review. An opaque, black-box approach is not only ethically questionable but also corrosive to trust.
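One way to make decisions reviewable is to record each one alongside its options, the rules applied, and a rationale. The sketch below is a minimal, hypothetical example: `DecisionRecord`, `AuditLog`, and the `framework_version` field are illustrative names, not a standard or a library API. Tagging each record with the version of the decision framework also makes it possible to compare decisions made before and after the framework is revised.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class DecisionRecord:
    """One auditable entry: what was chosen, from which options, and why."""
    chosen: str
    options: List[str]
    rules_applied: List[str]
    rationale: str
    framework_version: str  # hypothetical version tag for the decision framework
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())


class AuditLog:
    """Stores decision records; exposes no method to edit or delete them."""

    def __init__(self) -> None:
        self._records: List[DecisionRecord] = []

    def record(self, entry: DecisionRecord) -> None:
        self._records.append(entry)

    def export_jsonl(self) -> str:
        # One JSON object per line, so reviewers can inspect or diff the log.
        return "\n".join(json.dumps(asdict(r), sort_keys=True)
                         for r in self._records)

    def by_version(self, version: str) -> List[DecisionRecord]:
        # Select decisions made under one framework version for comparison.
        return [r for r in self._records if r.framework_version == version]


if __name__ == "__main__":
    log = AuditLog()
    log.record(DecisionRecord(
        chosen="proceed",
        options=["proceed", "reroute"],
        rules_applied=["no_red_light", "harm_below_0.8"],
        rationale="highest weighted score among rule-compliant options",
        framework_version="v1",
    ))
    print(log.export_jsonl())
```

Storing the rationale as free text is the simplest choice; a more rigorous design might also record the score each option received, so a reviewer can reconstruct why one option beat another.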

In addition, continuous monitoring and updating of the AI’s ethical decision-making framework are essential. Ethical norms and societal values evolve, and our systems must be capable of evolving with them. The ethical decisions made by an AI today might not be appropriate a decade from now, so built-in mechanisms for ongoing learning and adaptation are paramount.

The Human Element

Lastly, we must remember that the ultimate goal of AI is to serve humanity. We shouldn’t outsource our moral responsibilities to machines, no matter how intelligent they become. Humans need to remain at the helm, guiding, supervising, and, when necessary, intervening. Our tools should reflect our values, not replace them.

So, should AI follow the letter of the law or the spirit? Maybe the answer lies somewhere in between. Picture AI as an apprentice: it starts by following the rules while steadily learning to interpret the world more broadly and ethically. In this way, we might strike the right balance, ensuring that our artificial decision-makers are both compliant and conscientious, or rule-following moralists, if you will.

Whether we lean towards compliance or conscience, we must navigate the ethical labyrinth with care, mindfulness, and a dash of humility. After all, the future of AI decision-making is not just a technical challenge; it’s an ethical journey—a journey that we, as humans, must lead.
