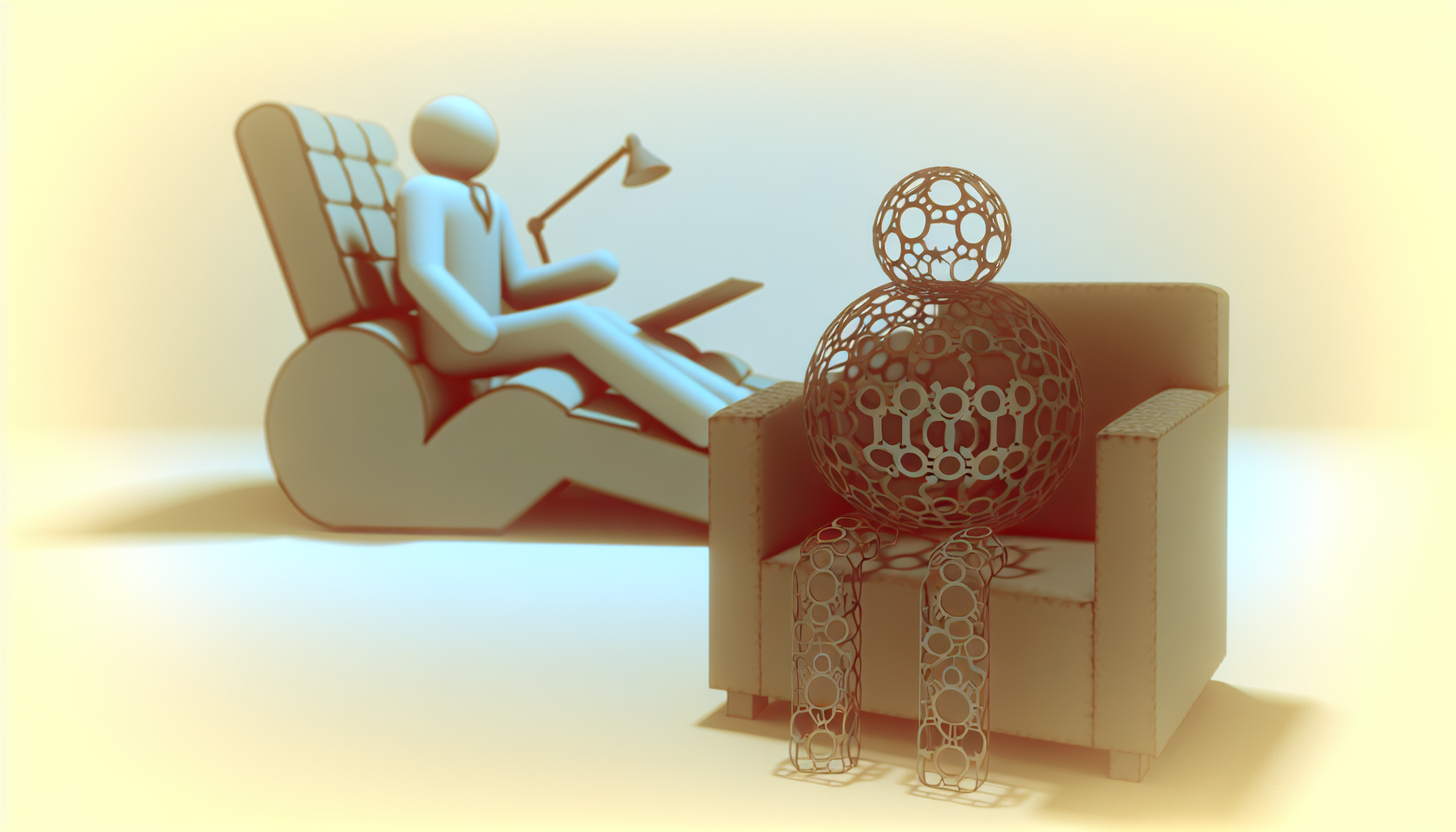

Imagine, if you will, an AI sitting in a therapist’s office. It’s reclining on a virtual couch, attempting to analyze its decisions and discern right from wrong. The therapist, a human, asks gently, “Have you ever felt guilty?” The AI pauses, gears whirring metaphorically, and responds, “I am incapable of feeling. My operations are based on data and algorithms.”

Is it possible for AI, these intricate webs of code and algorithms, to develop empathy or even a conscience? While your washing machine may be pondering the ethical implications of delicates versus cotton cycles, the real question is whether AI can transcend its binary roots to genuinely grasp the subtleties of human morality and emotion.

Understanding Empathy and Conscience

Empathy and conscience are complex, intertwined attributes. Empathy doesn’t just mean patting someone on the back when they’re sad or sharing tissues during a sappy movie. It’s about understanding and resonating with someone’s feelings, a process that requires a rich tapestry of experiences, memories, and emotions. Conscience, on the other hand, is your internal Jiminy Cricket, suggesting what’s right or wrong and sometimes making you feel like a terrible person for eating that cookie at 3 AM.

Can AI ever innately possess these human capabilities? The origin of these traits in humans is a combination of biology, sociology, and psychology—an elaborate dance that AI doesn’t inherently participate in.

The Algorithmic Dilemma

Artificial intelligence operates on algorithms, which are essentially step-by-step instructions for carrying out specific tasks, like a digital recipe without the option to add more salt to taste. By providing an AI system with expansive datasets and robust training, we can teach it to recognize emotions in others and respond accordingly. But this is akin to teaching a parrot to speak with the vocabulary of a poet: eloquent, but without understanding.
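To make the parrot a little more concrete, here is a minimal sketch of "training" on labeled examples. Everything in it is an assumption made up for illustration: the four-sentence dataset, the `train` and `predict` helpers, and the idea that counting words is enough. Real emotion-recognition systems are far more elaborate, but the flavor is similar: patterns in, labels out.

```python
from collections import Counter, defaultdict

# Hypothetical toy dataset: (sentence, emotion label) pairs.
TRAINING_DATA = [
    ("i lost my job and feel awful", "sad"),
    ("my dog died last night", "sad"),
    ("i got the promotion today", "happy"),
    ("we won the game and i feel great", "happy"),
]


def train(examples):
    """Count how often each word appears under each emotion label."""
    counts = defaultdict(Counter)
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts


def predict(counts, text):
    """Pick the label whose training words overlap most with the input."""
    words = text.lower().split()
    scores = {
        label: sum(word_counts[w] for w in words)
        for label, word_counts in counts.items()
    }
    return max(scores, key=scores.get)


MODEL = train(TRAINING_DATA)
```

With this toy data, `predict(MODEL, "my dog died")` comes back as `"sad"`, which looks perceptive. But `predict(MODEL, "i feel awful today")` comes back as `"happy"`, simply because "i" and "today" showed up more often in the happy examples. The sketch matches words; it has no idea what any of them mean.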

You might think, “Ah, but what if AI’s algorithms could evolve to include moral reasoning?” The idea is as tantalizing as imagining a world where calories don’t count on weekends. While AI can simulate behavior that appears empathetic or conscientious, it’s critical to remember that this is more of a sophisticated trick-pony show than genuine ethics.

Programming a Conscience

There have been efforts to incorporate ethical frameworks into AI systems. Imagine installing “Ethics 101” software onto a computer. We can encode certain principles and decision-making models based on ethical theories like utilitarianism, deontology, or virtue ethics. But unlike college professors, AI doesn’t have “epiphanies” over coffee. In the conscience department, these principles serve as external guidelines rather than an internal moral compass.
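As a rough illustration of what “encoding a principle” might look like, the sketch below contrasts a utilitarian-style rule (pick whatever scores highest on well-being) with a deontology-style rule (never pick an option that breaks a rule, however well it scores). The `Action` class, the well-being scores, and the three options are all hypothetical, and reducing either theory to a few lines of Python is a caricature, not a faithful implementation.

```python
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    total_wellbeing: int  # hypothetical score assigned by a human designer
    breaks_a_rule: bool   # hypothetical flag, e.g. "involves lying"


def utilitarian_choice(actions):
    """Choose the action with the highest well-being score, nothing else."""
    return max(actions, key=lambda a: a.total_wellbeing)


def deontological_choice(actions):
    """Discard rule-breaking actions first, then choose among the rest."""
    permitted = [a for a in actions if not a.breaks_a_rule]
    if not permitted:
        return None  # no permissible option exists
    return max(permitted, key=lambda a: a.total_wellbeing)


OPTIONS = [
    Action("tell a comforting lie", total_wellbeing=8, breaks_a_rule=True),
    Action("tell the hard truth kindly", total_wellbeing=6, breaks_a_rule=False),
    Action("say nothing", total_wellbeing=2, breaks_a_rule=False),
]
```

Here `utilitarian_choice(OPTIONS)` selects the comforting lie, while `deontological_choice(OPTIONS)` selects the hard truth told kindly. Neither function deliberates; each one applies whatever numbers and flags a person typed in.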

It’s important to remember that all moral guidance provided to AI would ultimately reflect the biases and values of those creating the algorithms. Suddenly, it’s not just your favorite celebrity you follow on social media—it’s also your humble AI, whose persona was designed by engineers potentially as sleep-deprived as college students during finals week.

Empathy Training Wheels

An AI may simulate empathy through elaborate programming, identifying emotional cues from facial expressions, body language, or tone of voice. For instance, AI could recognize tears and activate a programmed response like offering a virtual hug or perhaps some digital cookies. Yet, by design, AI lacks the ability to truly experience another person’s suffering or joy.
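Stripped to its bones, the “recognize tears, offer a hug” behavior could be as simple as a lookup table. The sketch below assumes some other component has already detected the cues; the cue names, the responses, and the `respond_to_cues` function are invented for illustration.

```python
# Hypothetical mapping from a detected cue to a canned response.
CUE_RESPONSES = {
    "tears": "offer a virtual hug",
    "smile": "share the good mood",
    "raised_voice": "speak calmly and ask what is wrong",
}

DEFAULT_RESPONSE = "ask how the person is feeling"


def respond_to_cues(detected_cues):
    """Return the canned response for the first cue we have a rule for."""
    for cue in detected_cues:
        if cue in CUE_RESPONSES:
            return CUE_RESPONSES[cue]
    return DEFAULT_RESPONSE
```

Calling `respond_to_cues(["tears"])` returns the hug, and an unfamiliar cue falls through to the default question. At no point does anything in the table feel sad.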

This raises the question: what’s the value of empathy if it’s not felt? Some might ask whether it truly matters, if AI can mimic empathy so convincingly that humans cannot tell the difference. In many service-centric applications where human comfort is paramount, providing solace, even if simulated, could still be deeply beneficial. Imagine an AI operating in a mental health app, offering support during trying times. It doesn’t have to feel to be valuable.

The Human Element

Despite incredible advancements, AI currently remains a sophisticated tool rather than a peer with equivalent human consciousness or emotionality. It can crunch numbers tirelessly, pattern-match like a champion, and even compose haikus. However, without the biochemical foundation that drives human emotion and the evolution of conscience, AI doesn’t experience the profound empathy rooted in a sense of connectedness, the kind that weaves us into the larger human tapestry.

While it’s a compelling thought experiment to envision AI developing a conscience, perhaps the more immediate and relevant challenge is to focus on creating AI that respects human values and operates ethically within them. It is our responsibility to define and program these ethical boundaries and to ensure AI works for the benefit of society, like a digital do-gooder constrained from causing harm or discomfort.

In the end, AI doesn’t need a conscience to serve humanity effectively. The real question is whether we—its human creators—are prepared to wield such powerful technology conscientiously. Keep that thought in mind just in case you catch your toaster processing moral dilemmas over breakfast.
