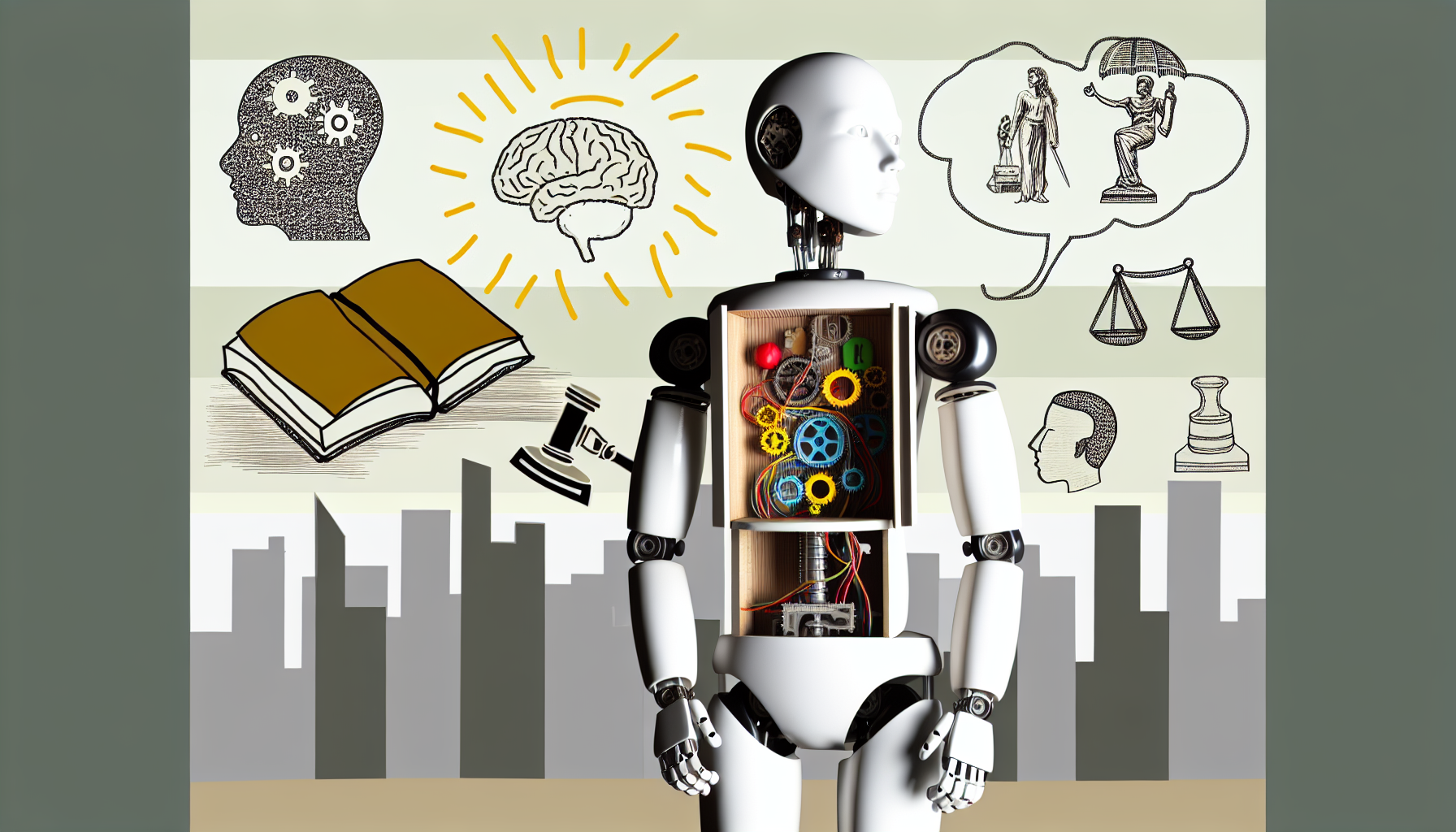

In the rapidly evolving world of artificial intelligence (AI), one of the most pressing challenges we face is known as the “alignment problem.” This problem revolves around ensuring that AI systems understand and operate in ways that are not only technically proficient but also ethically sound and in line with human values. As we delve deeper into this topic, it’s essential to understand both what the alignment problem entails and how it impacts our lives as we continue to integrate AI into society.

Understanding the Alignment Problem

The alignment problem stems from a basic question: how can we ensure that AI systems behave in ways that reflect human intentions and values? Unlike traditional computer programs, which follow clearly defined instructions, machine learning algorithms develop their own methods of problem-solving based on the data they are trained on. This means they can sometimes draw lessons from the data that diverge from our intended outcomes.

For example, consider an AI designed to optimize traffic flow in a city. If the AI is merely programmed to reduce travel time without understanding the broader implications of its recommendations, it might suggest routes that create significant environmental damage or neglect certain neighborhoods. In this case, the AI successfully meets its goal but fails to align with the values of sustainability and equity.
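The gap between a stated goal and the intended outcome can be made concrete with a small sketch. The routes, numbers, and weights below are invented purely for illustration, and the weighted cost is only one possible way to encode sustainability and equity, not an established method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str
    travel_minutes: float
    emissions_kg: float         # hypothetical environmental cost
    neighborhood_burden: float  # hypothetical 0-1 score for impact on residents


# Made-up candidate routes for a single trip.
ROUTES = [
    Route("residential shortcut", 18.0, 6.0, 0.9),
    Route("arterial road", 22.0, 4.0, 0.2),
    Route("ring road", 27.0, 5.0, 0.1),
]


def time_only_cost(route: Route) -> float:
    """The narrow objective: travel time and nothing else."""
    return route.travel_minutes


def weighted_cost(route: Route,
                  emissions_weight: float = 2.0,
                  burden_weight: float = 20.0) -> float:
    """Travel time plus penalties for emissions and neighborhood burden.

    The weights are assumptions; choosing them is itself a value judgment.
    """
    return (route.travel_minutes
            + emissions_weight * route.emissions_kg
            + burden_weight * route.neighborhood_burden)


def pick_route(routes, cost):
    """Return the route with the lowest cost under the given objective."""
    return min(routes, key=cost)


fastest = pick_route(ROUTES, time_only_cost)
balanced = pick_route(ROUTES, weighted_cost)
print(fastest.name)
print(balanced.name)
```

With these invented numbers, the time-only objective picks the residential shortcut, while the weighted objective picks the arterial road. Neither choice is "correct" in general; the point is that the optimizer only respects values that appear in its objective.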

Why Alignment Matters

Aligning AI with human values is crucial because the decisions these systems make have real-world consequences. As AI systems are increasingly integrated into various facets of our lives, from healthcare and finance to law enforcement and entertainment, the need for alignment becomes even more pronounced. Poor alignment can lead to decisions that harm rather than help, undermine social trust, or perpetuate existing biases.

Take, for instance, facial recognition technology. While it can enhance security and protect against crime, improper alignment may result in a system that disproportionately targets certain demographics. This not only violates the principle of fairness but can also exacerbate social inequalities and erode public trust in institutions. Thus, the alignment problem is not merely a technical challenge; it is a moral imperative that we collectively face.
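One way to check whether a system treats demographics unevenly is to compare error rates across groups. The sketch below is a minimal illustration on synthetic records; the group labels and data are made up, and the false positive rate is just one of several fairness measures that could be used.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Compute the false positive rate per group.

    records: iterable of (group, predicted_match, actual_match) tuples.
    Only cases where actual_match is False count toward the rate.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:
            negatives[group] += 1
            if predicted:
                false_positives[group] += 1
    return {group: false_positives[group] / count
            for group, count in negatives.items()}


# Synthetic data for illustration only.
RECORDS = [
    ("group_a", True, True),
    ("group_a", True, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_b", True, True),
    ("group_b", True, False),
    ("group_b", True, False),
    ("group_b", False, False),
    ("group_b", False, False),
]

rates = false_positive_rates(RECORDS)
gap = max(rates.values()) - min(rates.values())
print(rates)
print(gap)
```

In this synthetic data, people in group_b who are not a true match are flagged twice as often as those in group_a. A gap like this is a signal to investigate further, not a complete fairness audit.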

Bridging the Gap: Approaches to Alignment

To address the alignment problem, researchers and AI practitioners are exploring several strategies. One approach involves developing more transparent algorithms. Transparency means creating AI systems where the decision-making processes are understandable to humans. When users can see how an AI arrives at its conclusions, they can better assess whether it aligns with their values.

Another promising strategy is to involve diverse stakeholders in the development process. Many organizations are beginning to recognize the importance of diverse perspectives in forming AI values. By including input from various communities, developers can create AI systems that reflect a wider array of human experiences and concerns.

Furthermore, ongoing education about AI and its implications is vital. As we move forward, we must equip individuals not just with technical knowledge but also with an understanding of ethical and philosophical considerations surrounding AI. This will foster a culture that prioritizes alignment and encourages collaborative efforts toward designing AI systems that uphold societal values.

The Role of Regulation and Governance

As we confront the complexities of the alignment problem, regulation and governance play indispensable roles. Governments and institutions need to establish guidelines and ethical frameworks that shape AI development and deployment. Clear standards push companies to prioritize ethical AI practices and make it possible to hold them accountable for their actions.

However, regulation must strike a balance. Over-regulating could stifle innovation, while under-regulating might lead to harmful practices. Achieving this balance requires dialogue among technologists, ethicists, policymakers, and the public. By collaborating, we can create robust governance frameworks that not only encourage innovation but also uphold human dignity and rights.

Looking to the Future

As artificial intelligence becomes more deeply embedded in our daily lives, the conversation around the alignment problem becomes increasingly urgent. It challenges us to consider what kind of future we want to build together: one where technology serves humanity or one where it operates in a vacuum, disconnected from our values.

To pave the way for a future where AI aligns with human values, we must commit to interdisciplinary research, public discourse, and ethical action. Let’s not see alignment as merely a challenge to be tackled but rather as an opportunity to construct a world where technology and humanity flourish side by side. We have the tools and knowledge; now, it’s about aligning them with our shared values.

Conclusion

The alignment problem is a critical issue facing not just AI developers but all of society. By striving for a deeper understanding of how to bridge human values and machine learning algorithms, we can work towards developing AI systems that truly reflect the aspirations and ethics of humanity. This journey will require effort, collaboration, and a vision rooted in shared progress. Together, we can ensure that as AI evolves, it does so in a way that benefits all of us.
