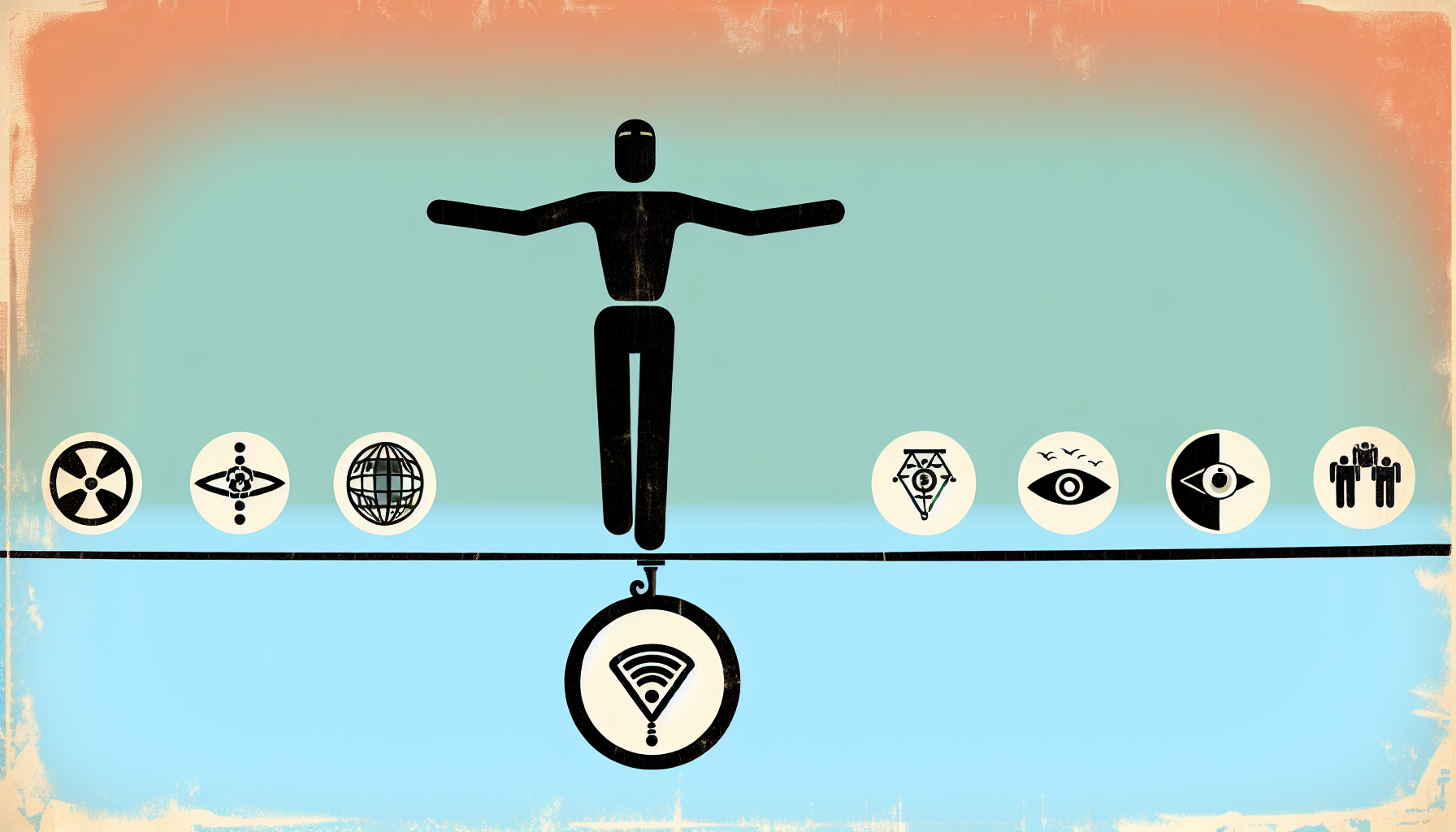

In a world where machines are becoming smarter than a university chess team, there’s a pressing question buzzing like a persistent fly: how do we ensure that these ultra-smart contraptions from the future are on our side? This is the ethics of AI alignment, the delicate art of balancing machine autonomy and human values. Imagine a tightrope walker balancing precariously between human flourishing on one side and a dystopian sci-fi film on the other.

Why the Fuss About AI Alignment?

Before you dismiss AI alignment as another tech-geek buzzword, let’s break it down. Picture a robot with as much autonomy as a teenager enjoying their first taste of freedom. It can make choices, navigate complexity, and process more data in the blink of an eye than a Swiss bank’s computers. But if it isn’t aligned with human values, it could metaphorically trip on a skateboard and cause chaos, intentionally or not.

If we leave machines to their own devices without a moral compass, we might wake up one day to find them optimizing for something like “maximum paperclip production” at the expense of all life on Earth. Extravagant? Absolutely. Impossible? Less so. The point of the thought experiment is that a system pursuing a narrow goal with great competence can do enormous damage without any malice at all. We need these advanced systems to be autonomous enough to solve problems but aligned enough to solve the right ones.
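For the code-curious, here is a toy sketch of the paperclip problem. Everything in it is made up for illustration: the ten units of "resources", the function names, and especially the idea that human values could be squeezed into a single rule like "leave at least 7 units for everything else". It only shows how an optimizer does exactly what its objective says and nothing more.

```python
def best_allocation(objective, total_resources=10):
    """Try every split of resources between paperclips and everything else,
    and return the split that scores highest under the given objective."""
    candidates = [
        (clips, total_resources - clips) for clips in range(total_resources + 1)
    ]
    return max(candidates, key=objective)


def naive_objective(allocation):
    """Hypothetical objective: more paperclips is always better."""
    clips, _everything_else = allocation
    return clips


def constrained_objective(allocation):
    """Hypothetical objective: still likes paperclips, but rules out any plan
    that leaves fewer than 7 units for everything else (a crude stand-in
    for the values the naive objective never mentions)."""
    clips, everything_else = allocation
    if everything_else < 7:
        return float("-inf")
    return clips


print(best_allocation(naive_objective))        # (10, 0): all paperclips, nothing else
print(best_allocation(constrained_objective))  # (3, 7): paperclips within limits
```

The naive optimizer is not evil; nobody told it that anything besides paperclips mattered. The hard part in real life is that the missing rule is never as simple as one `if` statement.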

The Challenge of Defining Human Values

Now, let’s picture the United Nations of AI alignment, attempting to decide what human values are in the first place. Spoiler: it’s complicated. Human values are vast, diverse, and sometimes contradictory. They range from universal concepts like kindness and justice to subjective preferences like pineapple on pizza (a debate for another time).

The sheer complexity and fluidity of human values present a significant challenge in encoding them into AI systems. These systems need more than a rule book; they need a full-fledged encyclopedia of “humanity for dummies” that captures the essence of being human without flattening it into a robotic interpretation.

To make things trickier, human values evolve. The moral standpoints that seemed universally acceptable a century ago might raise eyebrows today. Smart machines need to adapt, learning to read between the ever-changing lines of human ethics as gracefully as a chameleon on a neon rainbow.

Machine Autonomy with a Sprinkle of Ethics

So, how do we give AI systems the autonomy they need while ensuring they operate within the ethical boundaries of the human values circus tent? Think of machines as culinary geniuses spicing up their recipes—but instead of paprika or cumin, we’re sprinkling a generous dose of ethics into their decision-making frameworks.

One approach is integrating ethical reasoning into AI systems’ algorithms. This involves embedding moral principles, beyond quantifiable metrics, into their decision-making processes. But it’s like teaching your cat to play the piano: challenging and likely to end with unintended outcomes.

Another option is human oversight: AI systems operate freely most of the time but call in reinforcements (that is, human experts) when a decision falls into a moral grey area. Think of it as a safety net, though one must hope the human overseers have enough caffeine and empathy in their systems.
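The safety-net idea can be sketched in a few lines. This is a minimal illustration, not how any real system does it: the `ethical_risk` score, the `0.3` threshold, and the `ask_human` callback are all assumptions invented for the example, and producing a trustworthy risk score in the first place is the genuinely hard part.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    action: str
    # Hypothetical score: 0.0 means clearly fine, 1.0 means clearly harmful.
    ethical_risk: float


def decide(
    decision: Decision,
    ask_human: Callable[[Decision], bool],
    risk_threshold: float = 0.3,
) -> str:
    """Act autonomously on low-risk decisions; escalate the rest to a human."""
    if decision.ethical_risk < risk_threshold:
        return f"auto-approved: {decision.action}"
    # Grey area: the machine stops and a person makes the call.
    if ask_human(decision):
        return f"human-approved: {decision.action}"
    return f"blocked: {decision.action}"


def cautious_reviewer(decision: Decision) -> bool:
    """Stand-in for a human overseer who rejects everything escalated to them."""
    return False


print(decide(Decision("reorder coffee", 0.05), cautious_reviewer))
print(decide(Decision("deny a loan application", 0.6), cautious_reviewer))
```

The threshold is the design choice to argue about: set it too high and the humans never get asked, set it too low and they drown in questions about coffee orders.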

The Need for Continuous Dialogue

When it comes to AI alignment, there’s no timeless golden rule. It requires ongoing dialogue among tech developers, ethicists, policymakers, and every concerned planetary citizen—especially the barista who correctly foretold the 2020s as a science-fiction chapter. It’s a collaborative effort that demands constant reevaluation of both technological capabilities and human moral landscapes.

By staying engaged in continuous conversations, society can ensure the alignment of AI systems evolves alongside our values. It’s like updating your phone apps—except instead of squashing bugs, we’re tackling ethical dilemmas one algorithm at a time.

Walking the Tightrope

Balancing machine autonomy and human values is an art, requiring equal measures of creativity, vigilance, and, let’s admit, a leap of faith. As we step further into an AI-infused future, our tightrope walk must remain steady and well-judged. The task extends beyond technologists and ethicists to society at large, with each of us doing our part to make sure our increasingly intelligent machines still prefer helping humanity over stockpiling industrial office supplies.

The ethics of AI alignment challenge us to construct a future where machines serve as allies, helping us improve our world rather than perplex it. With thoughtful consideration and diligent effort, we can steer this technological Titanic toward brighter horizons, avoiding the iceberg of moral debacle. Safe sailing, everyone!
