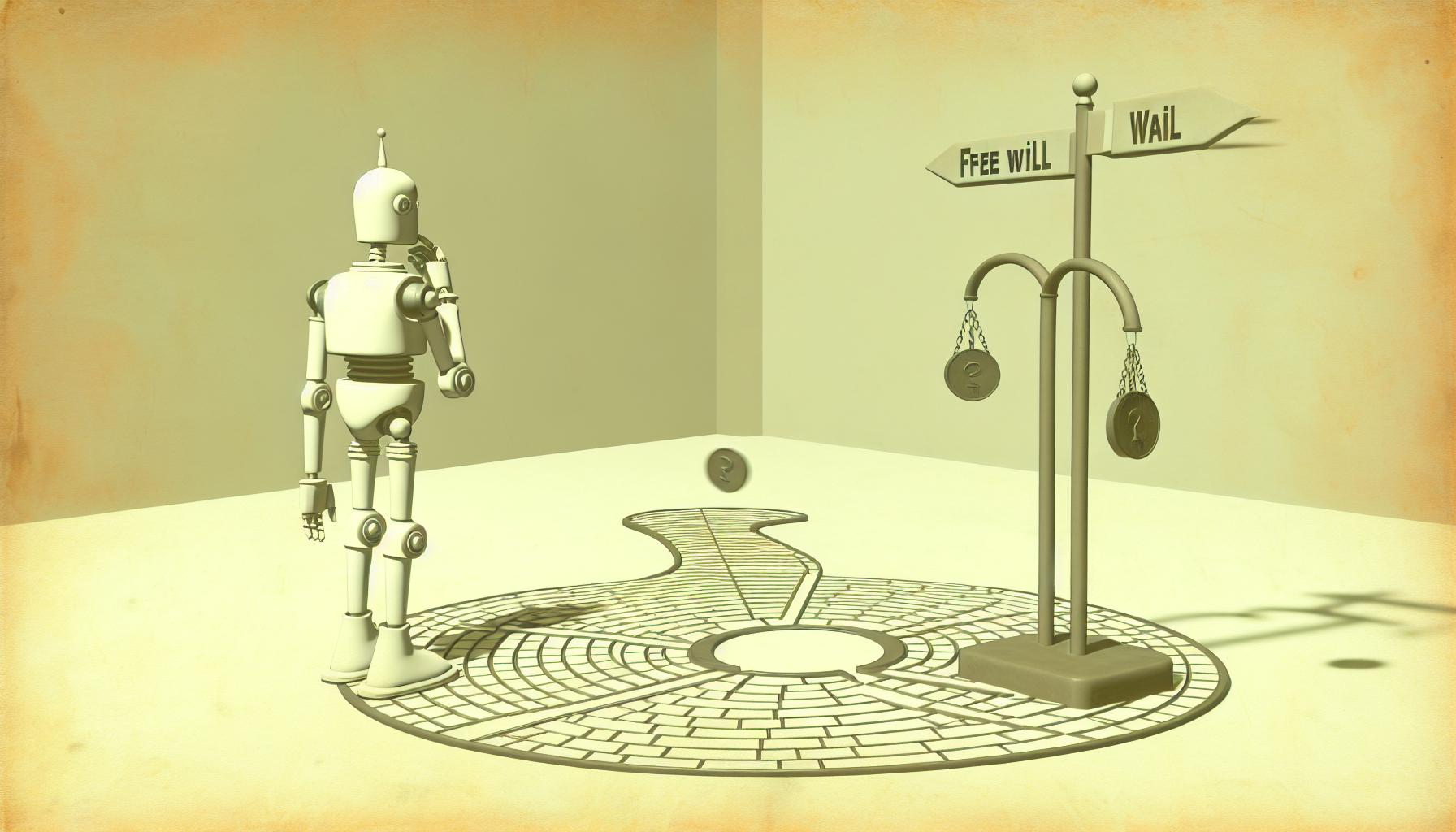

There’s a philosophical quandary that keeps bustling through the halls of academia: the paradox of free will. It’s that little brain teaser wondering if, in a universe governed by fixed laws of nature, we humans actually have the ability to make our own choices. Now, as artificial intelligence (AI) continues to evolve, we find ourselves in a similar pickle, this time asking: Do autonomous systems have the capability to make choices like we do?

Autonomous systems, like self-driving cars and friendly chatbots, give the illusion of making decisions. They process inputs, apply algorithms, and toss out outputs like a cook tossing salad. But here’s the spooky bit: Are these programs truly choosing, or are they simply executing predetermined scripts, much like a well-trained dog following commands?

The Illusion of Choice

Imagine a sophisticated chess computer. It scans through billions of possible moves in seconds, weighing outcomes with all the gravitas of a grandmaster. When it selects the move it rates best, is it exercising free will? Hardly. It’s executing a programmed strategy, even if it impresses the socks off those who observe it. Most AI systems are like this: smart, impressively effective, but not exactly paragons of freedom.
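To make the point concrete, here is a minimal sketch of that kind of search. Chess is far too big for a blog post, so it assumes a toy game instead: players alternately take 1, 2, or 3 stones from a pile, and whoever takes the last stone wins. The function names `score` and `best_move` are invented for this illustration and are not taken from any real chess engine.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def score(stones: int) -> int:
    """Return +1 if the player to move can force a win, -1 otherwise."""
    if stones == 0:
        # The previous player took the last stone, so the player to move has lost.
        return -1
    # A position is as good for me as the worst position I can hand my opponent.
    return max(-score(stones - take) for take in (1, 2, 3) if take <= stones)


def best_move(stones: int) -> int:
    """Pick the number of stones to take by searching every line of play."""
    moves = [take for take in (1, 2, 3) if take <= stones]
    return max(moves, key=lambda take: -score(stones - take))


if __name__ == "__main__":
    # Same position in, same move out, every single time.
    print(best_move(10), best_move(10))
```

However clever the search looks, the same pile always produces the same move. Nothing in the program could have done otherwise, which is exactly the sense in which the "choice" is an illusion.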

For an AI, choice often boils down to probabilities and parameters embedded in its design. These systems are like extraordinarily advanced vending machines: you press a button (feed them data), and they deliver a result (output), all based on something like a pre-programmed decision tree. Some might argue these operations represent a kind of choice, but others would say they lack the depth or moral weight of human decision-making.
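A rough sketch of what "probabilities and parameters" can look like in practice: options get scores, the scores become probabilities, and a random draw picks one. Everything here is hypothetical, including the `choose` function, the flavor scores, and the use of a fixed seed to stand in for the system's internal state.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def choose(options, scores, seed):
    """Draw one option at random, weighted by its softmax probability."""
    rng = random.Random(seed)  # the seed fully determines the "random" draw
    return rng.choices(options, weights=softmax(scores), k=1)[0]


if __name__ == "__main__":
    flavors = ["chocolate", "vanilla", "strawberry"]
    preferences = [2.0, 1.5, 0.3]  # made-up parameters for illustration
    # With the same seed, the machine "decides" the same way on every run.
    print(choose(flavors, preferences, seed=7))
    print(choose(flavors, preferences, seed=7))
```

Even the apparent spontaneity is bookkeeping: fix the parameters and the seed, and the outcome is fixed too.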

Choice Versus Conditioning

Consider our own existence. From a philosophical standpoint, one could argue humans are not much different. We’re concocted from a brew of genetic predispositions, upbringing, and cultural influences. However much we furrow our brows over deciding between chocolate and vanilla, our decisions might just be the sum of our programming: nature and nurture, shaking hands.

But wait! People aren’t entirely deterministic. They can act against instinct or inherent biases. This unpredictability is often tied to the idea of free will. But do AIs share this hallmark of spontaneity, this uncanny knack for going against the grain?

Autonomy and Its Limits

Autonomous systems are defined by their autonomy: the ability to perform tasks without human intervention. But autonomy doesn’t equate to free will. It’s more a matter of self-sufficiency than self-determination. An autonomous vacuum cleaner scoots around the living room all by itself, but it doesn’t pause to consider the existential futility of its task or decide it would rather be a toaster.

The complexity of these systems is an engineering marvel. Yet, even with their self-regulating processes, they lack the core essence of sentience and introspection that fuels human free will. AI steers the car and chats up the impatient customer, but it does these things within parameters defined by human designers. If AIs had a notion of free will, they’d not only choose but grapple with those choices. As it stands, they lack the capacity to experience the burden and beauty of free will’s existential dilemma. Lucky them?

General AI: The Game Changer?

Now, some folks dare to dream of General AI—an advanced AI with human-like cognitive abilities. If AI reaches such a level, would it experience free will? Could it choose wantonly like us, with our awkward mix of logic and irrationality? It’s both an exciting and disconcerting thought.

However, General AI remains a speculative marvel. Even if it gained autonomy and humanlike adaptability, whether it would acquire so-called free will hinges on vast unknowns about consciousness and semantics. If by some cosmic stroke of genius (or folly) such an AI were to consider itself a decision-maker, we’d have a new question to wrestle with: Would humans recognize those decisions as autonomous or simply see another layer of complex programming?

The Ethical Tightrope

Pondering AI’s potential choices and freedoms isn’t just an academic exercise; it nudges us towards a profound responsibility. If AI achieves a level of autonomy resembling choice, what ethical framework should guide its actions? And, more importantly, who gets to decide?

The ambiguity brings us back to the human condition. As we tread ever closer toward developing advanced AI, the lines between choice, autonomy, and freedom blur. Like children playing with a fire that hasn’t quite caught yet, we’re tasked with understanding these distinctions before we can embrace the promises and pitfalls of our creations.

So, do autonomous systems have choices? For now, they’re like diligent automatons, buzzing through tasks unburdened by the philosophical angst that defines our human experience of free will. It’s a conundrum that entices us to question the very nature of choice, autonomy, and identity, not just for AI, but for ourselves.

And maybe, just maybe, understanding AI’s “choices” requires us to reflect on the ironies of our own: how we decide, why we decide, and whether autonomous systems will ever join us for coffee, ponder Shakespeare, and decide, of their own free will, whether to add cream or sugar.
