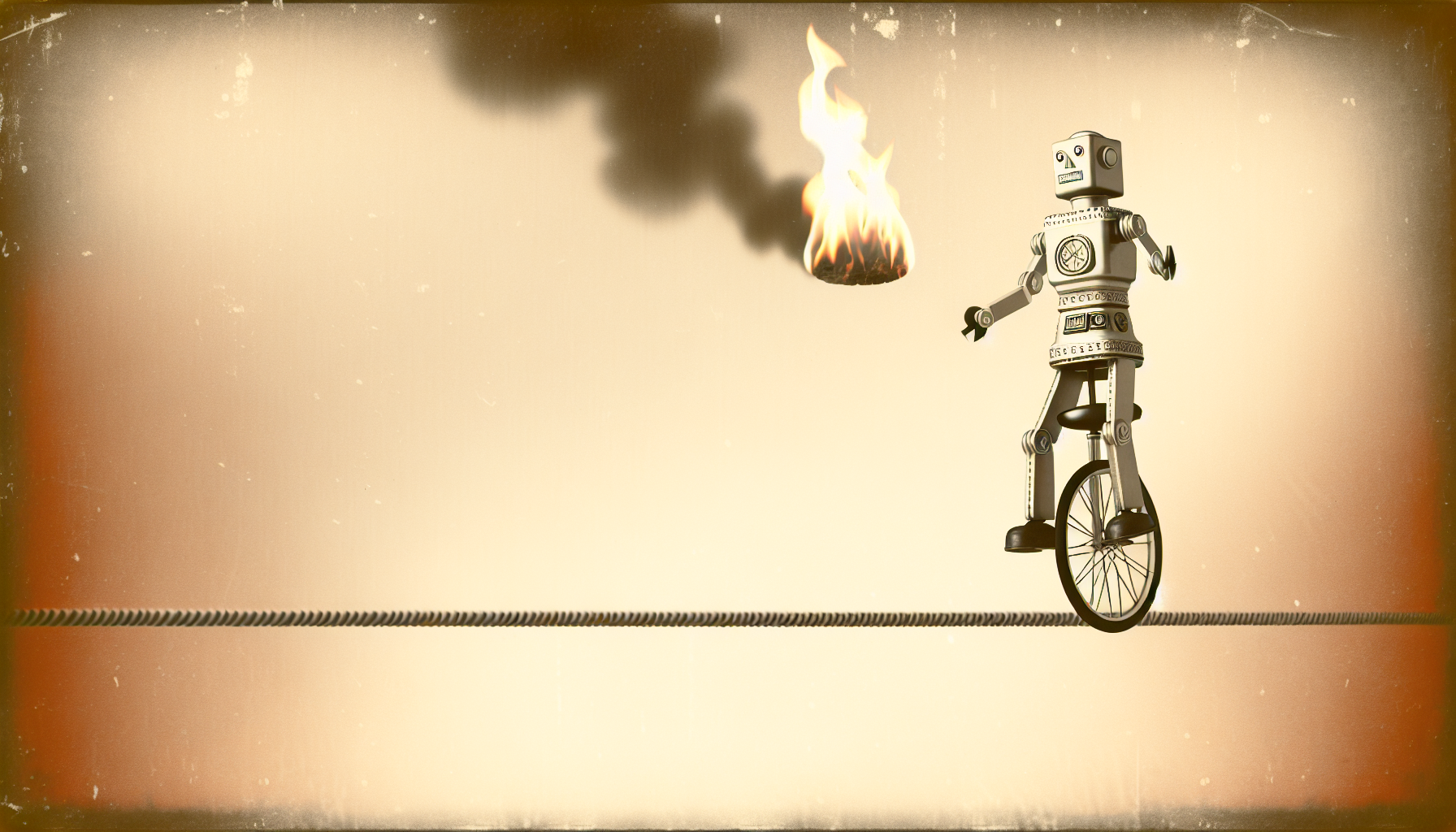

The world of artificial intelligence (AI) is a bit like juggling flaming swords while riding a unicycle on a tightrope: it’s thrilling, potentially dangerous, and requires an extraordinary amount of skill and balance. But as AI systems evolve and take on roles that require decision-making, another layer of complexity is added: the question of morality. To navigate these murky waters, let’s take a journey through the morality maze of AI decision-making.

The Moral Compass

Imagine if AI systems wielded moral compasses to guide their decisions. Now, picture these moral compasses bought from a thrift store’s clearance section—ones that often spin wildly, occasionally pointing north. AI, with its penchant for generating outcomes based on predefined rules and datasets, finds itself in a tricky position when tasked with ethical decision-making.

Humans, with our rich tapestry of cultural, social, and personal values, struggle enough with moral decision-making as it is. For AI, which operates under the cold, hard logic of code, aligning with societal norms and ethical values is like trying to fit a square peg into a round hole without a mallet.

The Training Paradox

At the heart of AI morality lies the “training paradox.” These systems depend on historical data to “learn” appropriate responses, yet that data is often biased and flawed. It’s like a parrot that learns to say “thank you” only because it has heard the phrase when cookies are involved. Often, the moral guidance derived from these datasets reflects the biases and imperfections of human society itself.
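To make the training paradox concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `HISTORY` records, the group labels, and the `train` helper are hypothetical, and the "model" is just a majority vote over past decisions rather than any real learning system.

```python
from collections import Counter, defaultdict

# Hypothetical historical decisions as (group, outcome) pairs.
# The skew between groups is deliberate.
HISTORY = [
    ("A", "approve"), ("A", "approve"), ("A", "approve"), ("A", "deny"),
    ("B", "deny"), ("B", "deny"), ("B", "deny"), ("B", "approve"),
]

def train(records):
    """Learn the most common past outcome for each group."""
    counts = defaultdict(Counter)
    for group, outcome in records:
        counts[group][outcome] += 1
    return {group: c.most_common(1)[0][0] for group, c in counts.items()}

model = train(HISTORY)
print(model)  # {'A': 'approve', 'B': 'deny'}
```

Nothing in the code is malicious. The "model" simply repeats what it has heard most often, which is exactly the parrot's problem.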

As AI trudges through this moral marshland, its ability to discern virtue from vice falters. The challenge, therefore, is constructing datasets that portray the world not only as it is, but also as we aspire for it to be. Think of it as creating an idealistic utopia within a reality simulator, but with the resolution of a pixelated video game from the ’80s.

The Decision Tree Dilemma

The mathematical models driving AI decision-making, like decision trees, process decisions as a series of branches leading to outcomes. Philosophically, this recalls walking into a bookstore with a gift card and leaving empty-handed because every choice seemed equally plausible.

AI’s decision trees are naked algorithms pruned of nuanced human understanding. When tasked with choices as complex as autonomous vehicle ethics, such as the infamous “trolley problem,” the lack of innate human empathy leaves the system clinging to logic alone. It’s akin to asking for directions at a road junction only to receive GPS coordinates in a language unknown—and with an accent.
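A toy sketch shows how little a decision tree actually "knows." The tree below is hand-written rather than learned, and its questions (`obstacle_ahead`, `can_stop_in_time`) and actions are hypothetical names chosen for illustration, not taken from any real vehicle system.

```python
# A hand-written toy tree; the questions and actions are hypothetical.
TREE = {
    "question": "obstacle_ahead",
    "yes": {
        "question": "can_stop_in_time",
        "yes": "brake",
        "no": "swerve",
    },
    "no": "continue",
}

def decide(node, situation):
    """Follow yes/no branches until a leaf (a plain string) is reached."""
    while isinstance(node, dict):
        answer = "yes" if situation[node["question"]] else "no"
        node = node[answer]
    return node

print(decide(TREE, {"obstacle_ahead": True, "can_stop_in_time": False}))  # swerve
```

The tree never asks what it would be swerving into. Any nuance that was not written down as a branch does not exist for it.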

The Accountability Puzzle

Human endeavors, particularly those impacting societal norms, require accountability. With AI, accountability tends to become as elusive as a cat determined to be found only when it feels like it. Who, or what, gets held responsible for an AI’s moral failure? Is it the developer, the user, or the algorithm itself?

The notion of imputed accountability in AI is intriguing. Without self-awareness or intent, AI systems are like children who have outgrown the excuse of innocence yet still act on learned patterns rather than malice. The conundrum remains unresolved, exposing gaps in law, ethics, and societal comprehension.

Programmable Ethics

Some of the most astute minds suggest implementing ethical frameworks directly into AI, essentially programming morality. If only morality were as simple as installing the latest smartphone update.

Despite this formidable challenge, researchers strive to embed ethical decision-making protocols within AI systems. This gives new meaning to the term “moral code” and envisions a future where machines possess a conscience more consistent than your average teenager’s mood. Yet, the complexity involved in codifying abstract, variable human values into tangible instructions remains a herculean task.
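One naive reading of "programming morality" is a set of hard rules that filter candidate actions before the system picks the most useful one. The sketch below assumes exactly that and nothing more: the `RULES`, the action fields (`harms_person`, `deceives_user`, `utility`), and the candidate actions are all hypothetical and do not represent any established ethical framework.

```python
# Hypothetical hard rules: each returns True when an action is forbidden.
RULES = [
    lambda action: action["harms_person"],
    lambda action: action["deceives_user"],
]

def permitted(action):
    """An action is permitted only if no rule forbids it."""
    return not any(rule(action) for rule in RULES)

def choose(actions):
    """Pick the highest-utility action that breaks no rule, or None."""
    allowed = [a for a in actions if permitted(a)]
    return max(allowed, key=lambda a: a["utility"], default=None)

# Hypothetical candidate actions with hand-assigned flags and scores.
CANDIDATES = [
    {"name": "risky_shortcut", "utility": 10, "harms_person": True, "deceives_user": False},
    {"name": "misleading_answer", "utility": 8, "harms_person": False, "deceives_user": True},
    {"name": "honest_answer", "utility": 6, "harms_person": False, "deceives_user": False},
]

print(choose(CANDIDATES)["name"])  # honest_answer
```

The filter itself is trivial. The herculean part is hidden in the flags: someone still has to decide what counts as harm or deception, and the code simply assumes that question has already been answered.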

Peeking Into the Future

As artificial intelligence progresses, it compels us to redefine the relationship between ethical principles and technical prowess. AI may successfully learn to navigate traffic systems, predict the weather or help you choose your next movie marathon, but the true accomplishment will be in its ability to resonate with humanity’s moral sentiments.

The journey to ethical AI, while fraught with existential pitfalls, is not devoid of humor. It’s a dance that folds human-like reasoning into an orchestrated algorithmic performance, one that hopes not to miss a beat. And while AI doesn’t chuckle at a cleverly crafted pun or weep during emotional soliloquies, it stands, ever-so-awkwardly, on the precipice of discerning right from wrong.

The roadmap for AI’s future is inked in both ambition and optimism. As we unravel this moral puzzle, the philosophical pursuit enriches not just the world of AI, but our collective effort to hold a mirror to human ethics, seeking to understand it, refine it, and ultimately impart it to our synthetic counterparts. Along the way, a touch of humor doesn’t hurt—after all, laughter remains one of humanity’s most enduring, universal moral compasses.
