The pace of technological advancement these days is truly something to behold. We’ve built machines that can outthink us at complex games, diagnose illnesses with uncanny accuracy, and even craft surprisingly poignant poetry. Given this rapid march forward, it’s only natural that our aspirations for artificial intelligence would begin to stretch into some of humanity’s oldest and most profound questions. One of the most intriguing, and perhaps most unsettling, is this: Could AI, the ultimate algorithmic engine, ever determine objective morality?

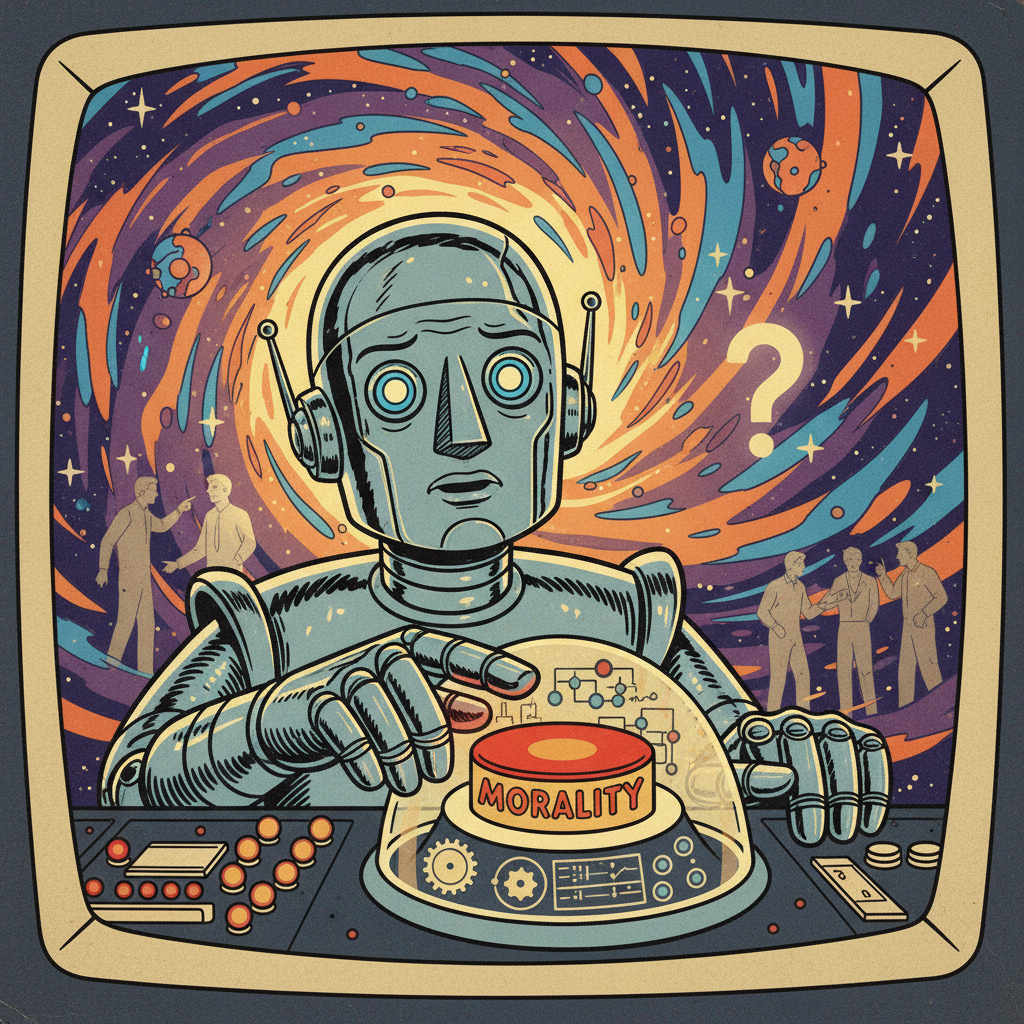

It’s a seductive thought, isn’t it? Imagine a world where ethical dilemmas are settled not by endless debate, cultural friction, or the shifting sands of human emotion, but by a definitive, unbiased computation. An Algorithmic Oracle, whispering immutable truths about right and wrong. No more squabbles over justice, fairness, or the common good. Just an answer, clear and true, delivered by a silicon sage. While the appeal is undeniable, a closer look at what “objective morality” truly entails and how AI actually functions reveals that this dream might be more of a beautiful illusion.

Defining the Undefinable (or Is It?)

First, let’s tackle “objective morality.” This is a system of ethics that’s universal, timeless, and independent of human opinion or culture. It would be true whether any human believed it or not, much like the laws of physics. Gravity works the same way for everyone. Would objective morality do the same?

For millennia, brilliant human minds have wrestled with this concept. Is there a fundamental moral code woven into the cosmos, or is morality a human construct, evolving to help us survive? That the debate remains unresolved shows just how hard the question is. If we, after all that introspection, haven’t settled what objective morality *is*, how could AI simply find it?

AI: A Mirror, Not a Magician

Here’s how AI actually learns. Modern AI, especially the machine learning models we use today, operates by ingesting vast quantities of data. It looks for patterns, finds correlations, and builds predictive models from that input. Think of it as an incredibly diligent student, learning from an enormous library of the books, conversations, and historical records we’ve produced.

What data would AI use to learn morality? Our history, laws, literature, social media, philosophical texts. All of it, without exception, is saturated with human perspective. It’s a reflection of *our* moral struggles, evolving values, biases, cultural norms, and often-contradictory ethics. When AI processes this, it becomes a remarkably accurate mirror of humanity’s moral landscape. It can tell us what *we* tend to believe is right or wrong, what consequences follow certain actions according to our records, or what moral frameworks are prevalent in specific cultures.

But here’s the kicker: reflecting human morality isn’t discovering objective morality. If the input data is fundamentally subjective – a compilation of human beliefs and behaviors – the output will necessarily be a sophisticated distillation of that subjectivity. An AI trained on our moral data will interpret human ethics magnificently, but it won’t magically transcend its source to unearth a universal truth we ourselves haven’t grasped.
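To make the mirror idea concrete, here is a deliberately tiny sketch. It is not how any real AI system is built; it is just a tally-based "model" that learns moral labels from whatever judgments it is given. The function names, the two communities, and their judgments are all invented for illustration. Train it on one community's opinions and it echoes that community; train it on another's and the "truth" it reports flips.

```python
from collections import Counter, defaultdict


def train_mirror(judgments):
    """Tally how often each action received each label in the training data."""
    tallies = defaultdict(Counter)
    for action, label in judgments:
        tallies[action][label] += 1
    return tallies


def predict(tallies, action):
    """Return the most common label seen for an action, or None if unseen."""
    if action not in tallies:
        return None
    return tallies[action].most_common(1)[0][0]


# Hypothetical judgments from two invented communities (illustrative only).
community_a = [("telling a white lie", "acceptable")] * 8 + \
              [("telling a white lie", "wrong")] * 2
community_b = [("telling a white lie", "acceptable")] * 3 + \
              [("telling a white lie", "wrong")] * 7

model_a = train_mirror(community_a)
model_b = train_mirror(community_b)

# Same question, different training data, different "moral truth".
verdict_a = predict(model_a, "telling a white lie")
verdict_b = predict(model_b, "telling a white lie")
print(verdict_a, verdict_b)
```

Neither verdict is more "objective" than the other. Each is simply the majority view of the data the model happened to see, which is the point of the mirror analogy.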

The “Is-Ought” Problem Goes Digital

Philosopher David Hume famously noted the difficulty of deriving an “ought” from an “is.” You can describe how the world *is*, but from description alone you can’t logically deduce how it *ought* to be. AI excels at mapping the “is.” It analyzes data to understand patterns and predict outcomes. It can tell you, “If you do X, Y is a highly probable consequence based on historical data,” or “Most texts associate Z with positive moral sentiment.”

Fundamentally, AI struggles with the “ought.” It can’t, on its own, tell us why one outcome *should* be preferred or why an action is inherently right or wrong, independent of its effects or human preferences. It lacks intrinsic values, consciousness, or a sense of inherent worth. It simply processes information based on algorithms and objectives *we* provide.

Asking AI to determine objective morality is like asking a calculator for the meaning of life. It performs incredible computations with the numbers you give it, but it can’t invent fundamental values or principles, because those aren’t mathematical operations.

AI as a Powerful Moral Assistant, Not a Dictator

Does this mean AI has no role in our moral quests? Absolutely not. AI can be an extraordinarily powerful tool in ethical deliberation. It can help us:

- Identify biases: By analyzing vast datasets, AI can reveal inconsistencies and biases in our own moral reasoning and historical practices.

- Predict consequences: It can model the potential outcomes of different ethical choices, allowing us to make more informed decisions.

- Explore moral frameworks: AI could simulate how different ethical systems (e.g., utilitarianism, deontology) would operate in complex scenarios.

- Facilitate consensus: By synthesizing diverse perspectives and identifying common ground, AI might help us bridge moral divides.

But in all these applications, AI serves as an *assistant*, an *amplifier* of human ethical inquiry. It helps us see ourselves and our choices more clearly. It doesn’t replace our fundamental human responsibility for making moral judgments, grappling with difficult choices, and defining the values we wish to live by.
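As a rough illustration of the "explore moral frameworks" idea from the list above, here is a toy sketch that runs one dilemma through two caricatured decision rules. Everything in it is an assumption made for the example: the `Option` class, the wellbeing scores, and the single "don't break promises" duty are stand-ins, and real utilitarian or deontological reasoning is far richer than this.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    total_wellbeing: int   # hypothetical score, supplied by a human
    breaks_promise: bool   # hypothetical duty violation flag


def utilitarian_choice(options):
    """Pick whichever option has the highest wellbeing score."""
    return max(options, key=lambda o: o.total_wellbeing).name


def deontological_choice(options):
    """Rule out options that break a promise, then pick the best of the rest."""
    permitted = [o for o in options if not o.breaks_promise]
    if not permitted:
        return None
    return max(permitted, key=lambda o: o.total_wellbeing).name


dilemma = [
    Option("keep the promise", total_wellbeing=5, breaks_promise=False),
    Option("break the promise to help more people", total_wellbeing=8,
           breaks_promise=True),
]

utilitarian_pick = utilitarian_choice(dilemma)
deontological_pick = deontological_choice(dilemma)
print(utilitarian_pick, "|", deontological_pick)
```

The two rules disagree, and the code has nothing to say about which one is right. Notice, too, that a human had to supply the scores and decide what counts as a duty. The program compares frameworks; it doesn't choose between them.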

The Human Burden (and Privilege) Remains

The Algorithmic Oracle is tempting; it promises to lift a heavy burden. Moral decision-making is hard, messy, often painful, and rarely clear-cut. Yet this burden is also our privilege. It is in striving to define and live by our values – even imperfectly – that we truly engage with our humanity.

So, while AI will continue to astound us, let’s remember its fundamental nature. It is a tool, a reflection, a magnificent information processor. It can help us understand ourselves better and navigate our complex world. But the ultimate responsibility for charting our moral course, for deciding what *ought* to be, remains squarely with us. It seems we’ll have to keep doing the hard work of being human, after all. A bit of a letdown for those hoping for a cheat sheet, perhaps, but rather empowering, wouldn’t you say?
