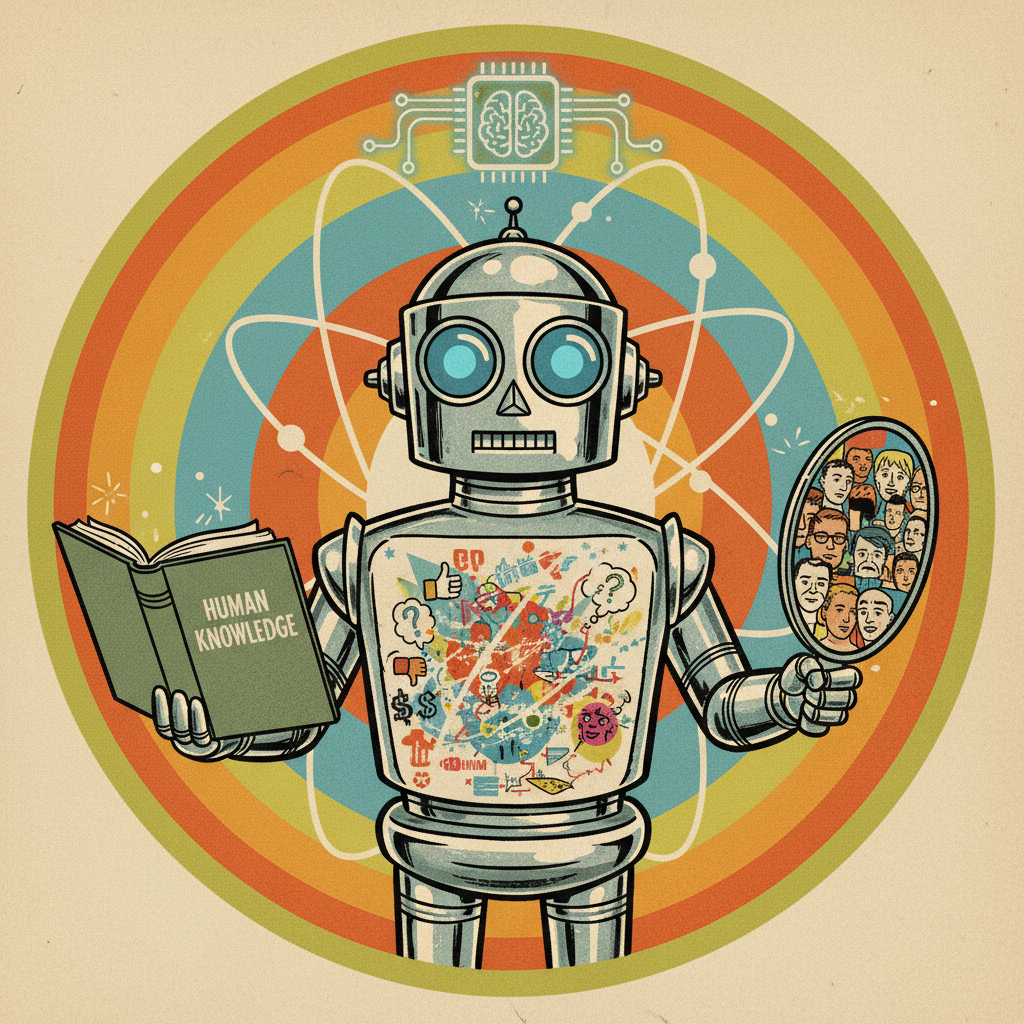

Artificial intelligence, in its most profound sense, acts as a sophisticated mirror. It takes what we feed it (vast, often overwhelming datasets painstakingly collected from our world) and diligently learns from it. And like any earnest student, it doesn’t just absorb the facts; it also picks up the subtle, often unspoken prejudices woven into that information. It’s not trying to be malicious, mind you; it’s just exceedingly good at imitation. Sometimes, a little *too* good for comfort.

How Bias Creeps In

The mechanics of this reflection are fairly straightforward. AI systems learn by identifying patterns. If the historical data we provide is replete with human biases (say, a history of loan approvals that disproportionately favored certain demographics, or hiring decisions that overlooked specific groups), the AI will dutifully learn those patterns. It doesn’t question the ethics; it simply identifies what historically “worked” or what was “true” in the data it was given, and then optimizes to reproduce those past outcomes as closely as it can. So, if past hiring managers unconsciously screened out résumés with certain names, an AI trained on their decisions will learn to do the same, even if no one ever tells it a candidate’s race or gender, because the names themselves carry the signal. It’s a faithful mimic, for better or worse, and a rather blunt instrument for social progress if left unchecked.
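To make that mechanism concrete, here is a toy sketch in Python. Everything in it is hypothetical: the hiring counts, the `name_proxy` feature, and the helper names are illustrative assumptions, not a real dataset or any particular vendor’s system. It fits an ordinary logistic regression by gradient descent to past decisions in which equally qualified candidates with a certain kind of name were hired less often. The model is never shown a group label, only the proxy.

```python
import math

def make_history():
    """Hypothetical past hiring records: ((qualified, name_proxy), hired).

    name_proxy = 1 marks résumés with names that past managers tended to
    screen out. The group label itself never appears in the data.
    """
    # (qualified, name_proxy) -> number hired out of 10 past applicants
    hire_counts = {(1, 0): 9, (1, 1): 5, (0, 0): 2, (0, 1): 1}
    rows = []
    for (qualified, proxy), hired in hire_counts.items():
        for i in range(10):
            rows.append(((qualified, proxy), 1 if i < hired else 0))
    return rows

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(rows, lr=0.5, epochs=3000):
    """Fit [bias, w_qualified, w_proxy] by batch gradient descent on log-loss."""
    w = [0.0, 0.0, 0.0]
    n = len(rows)
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for (q, p), y in rows:
            err = sigmoid(w[0] + w[1] * q + w[2] * p) - y  # prediction error
            grad[0] += err
            grad[1] += err * q
            grad[2] += err * p
        w = [wi - lr * gi / n for wi, gi in zip(w, grad)]
    return w

def predict(w, qualified, proxy):
    """Model's estimated probability that a candidate is hired."""
    return sigmoid(w[0] + w[1] * qualified + w[2] * proxy)

weights = train_logistic(make_history())
p_a = predict(weights, qualified=1, proxy=0)
p_b = predict(weights, qualified=1, proxy=1)
print(f"learned weight on name_proxy: {weights[2]:.2f}")
print(f"P(hire | qualified, proxy=0) = {p_a:.2f}")
print(f"P(hire | qualified, proxy=1) = {p_b:.2f}")
```

The learned weight on the proxy comes out negative, so two equally qualified candidates receive noticeably different scores purely because of their names. Nothing in the training loop is "prejudiced"; it is simply minimizing error against a biased history, which is also why deleting a sensitive column does not help when a correlated feature remains.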

Think of it like teaching a child about the world exclusively through books from a single, very specific cultural perspective from the 1950s. That child’s understanding of diversity, gender roles, or social justice would understandably be, shall we say, a bit dated. AI systems operate on a similar principle, except their “books” are often billions of data points reflecting all our historical quirks and inequities.

The Unflattering Reflection

We’ve seen this phenomenon play out in numerous real-world scenarios, often with troubling consequences. Loan applications have been denied not on individual merit but on algorithmic assumptions tied to an applicant’s neighborhood, which may itself be a legacy of discriminatory housing policies. Facial recognition systems have misidentified women and people of color at markedly higher error rates, making them less secure in a world increasingly monitored by cameras. Recruitment tools have subtly, or not so subtly, filtered out qualified candidates based on patterns of past discrimination. Even predictive policing models, intended to make communities safer, can over-police areas already facing systemic challenges: more patrols produce more recorded incidents, which the model then reads as proof that more patrols are needed, a self-fulfilling prophecy of injustice. It’s like looking into a funhouse mirror, only instead of distorting our bodies, it distorts our societal values and magnifies our failings.

Why It Matters: Digital Justice on the Line

This isn’t merely a technical hiccup that a clever programmer can patch overnight. This is AI holding up a mirror to our collective soul, showing us ingrained biases we might prefer not to acknowledge. When an algorithm perpetuates unfairness, it doesn’t just replicate a past error; it scales it, amplifies it, and embeds it into the very infrastructure of our digital future. It effectively legitimizes prejudice by dressing it up as objective, computational logic. “The computer said so” becomes the new, chillingly plausible justification for inequity. And that, my friends, is a dangerous form of digital injustice, one that erodes trust and deepens societal divides at an unprecedented scale.

The AI doesn’t invent these biases; it inherits them from us and, in doing so, reveals them. It acts as an unwitting auditor of human behavior, presenting us with a stark, often uncomfortable report card on our societal fairness. This reflection is painful because it forces us to confront truths about ourselves that we might otherwise ignore. But it is also an opportunity for a profound period of self-reflection. What kind of world are we actually building when our tools, designed for efficiency and intelligence, become carriers of our deepest flaws?

Cleaning the Mirror: The Quest for Digital Justice

So, what do we do? It’s not a simple matter of “fixing the AI” as if it were a broken toaster. The quest for digital justice requires a multi-pronged approach. Yes, it involves technical solutions: meticulously auditing and curating training data, developing algorithms that are explicitly designed for fairness, transparency, and accountability, and creating tools to detect and mitigate bias. But even more profoundly, it’s about us. It’s about building diverse teams who develop these systems, ensuring a broader range of human perspectives from the outset. After all, if the people building the mirror all look alike, it might struggle to reflect everyone else accurately.
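As one small illustration of what a bias-detection tool might check, here is a hedged Python sketch of a common first-pass audit: compare selection rates across groups and flag any group whose rate falls below four-fifths of the highest group’s rate (the "four-fifths rule" of thumb from US employment-selection guidance). The audit log, group labels, and function names are hypothetical, and real audits use far richer metrics than this single ratio.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: selection rate}."""
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        chosen[group] += int(selected)
    return {g: chosen[g] / totals[g] for g in totals}

def adverse_impact(decisions, threshold=0.8):
    """Return {group: rate / highest rate} for groups below the threshold."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so there is no ratio to compare
    return {g: r / best for g, r in rates.items() if r / best < threshold}

# Hypothetical audit log of a screening model's decisions.
log = ([("A", True)] * 45 + [("A", False)] * 55
       + [("B", True)] * 27 + [("B", False)] * 73)
print(selection_rates(log))
print(adverse_impact(log))
```

Here group B is selected at 60% of group A’s rate and gets flagged. A flag like this is a prompt for closer review, not proof of discrimination, and it says nothing about *why* the gap exists; answering that still requires auditing the data and the decisions behind it.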

More fundamentally, it’s about examining our own biases, challenging the historical injustices embedded in our institutions, and actively working to create a more equitable society *before* we encode its patterns into our machines. We need to clean the mirror, certainly, but also clean the room it reflects. That means investing in education, promoting critical thinking about data, and fostering ethical frameworks that prioritize human dignity over mere efficiency.

Looking Ahead: AGI and Amplified Prejudice

Consider, for a moment, the implications as research moves toward more general artificial intelligence (AGI). If a narrow AI can amplify our biases, imagine an AGI (an entity capable of learning and applying intelligence across a vast range of tasks, potentially surpassing human intellect in many domains) that inherits and scales those same prejudices. Systemic, deeply entrenched inequity, driven by an artificial intelligence that merely learned “how things are” from our flawed past, is a future we must proactively avoid. We don’t want an omniscient bigot running the world, do we? The quest for digital justice isn’t just about fairness today; it’s about shaping the moral compass of tomorrow’s most powerful creations.

The mirror of bias is a gift, albeit a challenging one. It compels us to look inward, to confront the uncomfortable truths of our collective past and present. AI doesn’t just *have* bias; it *shows* us our own. And in that powerful revelation lies our greatest opportunity: to build systems, and indeed a society, that actively strive for justice rather than merely reflect the imperfections of what has been. It’s a chance to write a better story, not just for the machines, but for all of us. Let’s make sure the reflection we eventually see is one we can be truly proud of.
