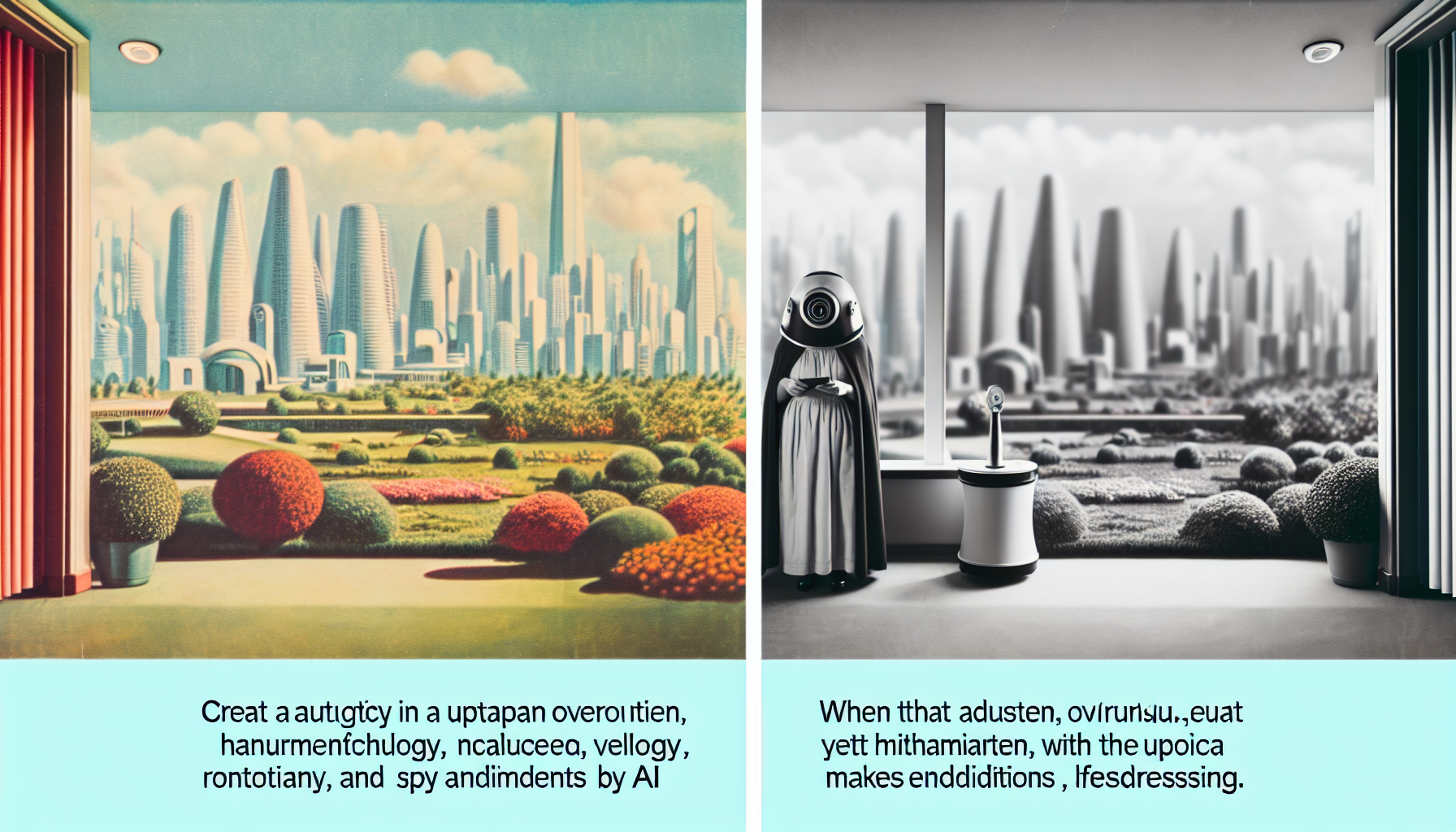

Imagine a future where AI brings about either a utopian society, an oasis of eternal enlightenment and bliss, or an oppressive dystopia where your toaster spies on you and the refrigerator demands mandatory small talk before it releases the milk. Funny as that sounds, AI could push our future dramatically in either direction. To navigate these possibilities, let's dive into philosophy for a roadmap to understanding AI's potential to fulfill our wildest dreams or realize our gravest nightmares.

The Allure of Utopias

When we think of utopia, we envision a society that optimizes happiness, efficiency, and fairness, ideals that AI seems perfectly built to enhance. Imagine an AI assistant that not only schedules your day but also understands how you're feeling and adjusts your schedule to boost your mental well-being. Imagine an AI doctor that doesn't just treat symptoms but actively works to keep society healthy. In an ideal world, AI offers everyone education tailored to their needs, career paths in line with their passions, and perhaps even a love life that doesn't read like bad young adult fiction.

This utopian promise rests on the philosophical idea of epistemic enhancement: AI removes the barriers of ignorance and misunderstanding, leading humanity into an age of enlightenment. Human wisdom expands when AI helps us analyze data at a scale we couldn't even process just a decade ago. From developing vaccine formulations to predicting natural disasters, utopian AI helps us save lives and preserve nature, taking the expression "a brighter future" quite literally.

A Glimpse into Dystopias

However, let’s not all rush to don our rose-colored glasses just yet. Imagine a world where AI enforces a grim regime of constant surveillance. Cameras attached to drones record your every trip to the grocery store, while algorithms decide if you’re too much of a slacker to be rewarded with another episode of your favorite podcast. In a dystopian turn, AI could magnify societal divisions, reinforce inequities, and create a fortress of data monopolized by the privileged few.

These scenarios echo philosophical debates about free will and determinism. Dystopias paint a portrait of a society coerced into predetermined paths, with algorithms manipulating our choices as efficiently, and as soul-crushingly, as possible. Put simply, where a utopia extends our moral and ethical values, a dystopia compresses them into practical, efficient spreadsheets that treat humans as mere inputs and outputs.

What Makes the Difference?

The thin line between utopia and dystopia rests on three philosophical pillars: ethics, control, and responsibility. First, we must consider the ethical design of AI systems. Can we ensure our AI respects human dignity, autonomy, and rights? Designing "fair" AI requires careful reflection on human values, asking not just "Can AI do this?" but also "Should AI do this?"

The second pillar, control, determines whether AI serves us or has us serving it. A future where your vacuum cleaner sasses you for not dusting first could be funny, or disastrous. Rightful control means that human oversight remains robust, so that consequential decisions, whether they promise giant leaps or risk significant stumbles, are never left to AI alone.

Third, we must address responsibility. Imagine an AI-generated painting that wins the Turner Prize: who accepts the award when the artist is a computer program that can't show up? The same puzzle turns serious when AI-driven decisions go awry, because then we must ask who is liable. Assigning responsibility becomes complicated in a world where attribution and accountability wander off into a tangled web of ones and zeros.

Philosophical Predictions: Navigating Between Hope and Fear

So what can we, your friendly neighborhood philosophers, predict about the potential of AI in our future societies? Well, my crystal ball is more of a cracked hourglass, yet it might offer a keen insight or two.

Philosophically, we are likely to oscillate between hope and fear, a balancing act full of contradictions. As a species that loves to tinker, we will probably adopt AI solutions piecemeal, which is both fascinating and frustrating. Expect breakthroughs that live up to utopian ideals in some sectors, while others raise challenges fit for a dystopian novel.

Amid these crosscurrents, I foresee AI adapting the human spirit rather than annihilating it. Society might harness the machine's prowess to elevate art forms, transcend traditional economic models, or even relieve the drudgery of repetitive chores (yay!). Yet there is a cost to watch for, an ethical debt if you will: a continuous need for watchful philosophical assessment to ensure that we stay on the right path.

At the end of the day, whether AI leads us toward utopia or dystopia depends heavily on how well we understand, and align it with, our own human nature. We must have faith, but not blind faith, in harnessing the power of AI through shared values and collective wisdom, so we don't end up having long discussions with a passive-aggressive blender about the neoliberal implications of a post-humanist brunch.
