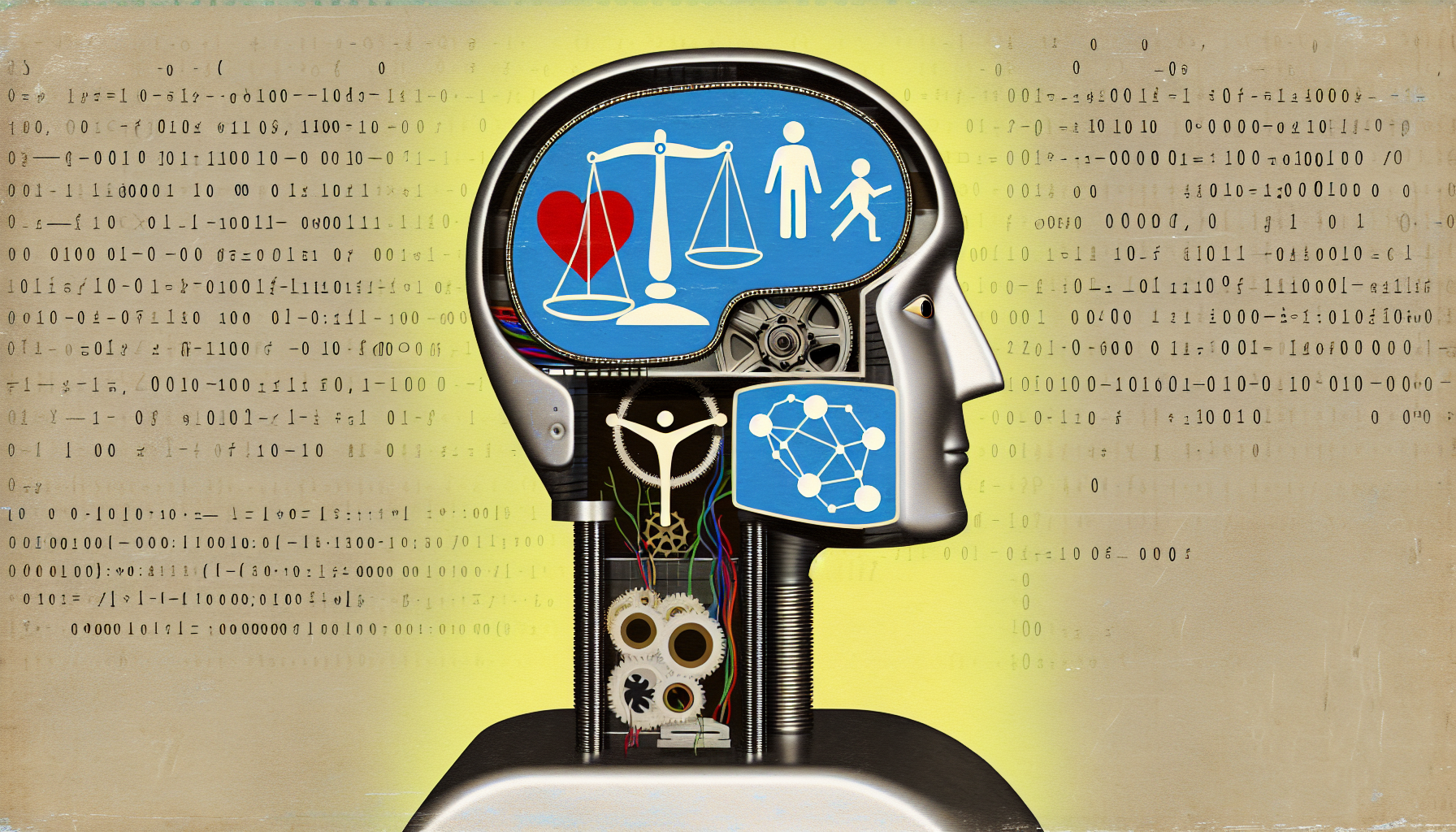

As artificial intelligence (AI) progresses at an astonishing rate, we face a profound question: Can AI systems develop ethical frameworks comparable to those of humans? To explore this, we must understand what ethics means in a human context and whether machines can grasp or embody these concepts.

Understanding Ethics

Ethics, at its core, deals with notions of right and wrong, guiding human behavior. Philosophers have debated ethics for centuries, arriving at various schools of thought—utilitarianism, deontology, virtue ethics, and more. Each framework provides a different lens through which we can evaluate actions, decisions, and their consequences. Humans are capable of navigating these complexities, taking into account cultural norms, emotional intelligence, and societal values.

The intricacies of human morality arise from our social interactions, emotions, and the contexts in which we find ourselves. When we make decisions, we weigh our personal experiences, the potential impact on others, and the moral implications of our actions. AI, on the other hand, operates fundamentally differently. It processes data and follows algorithms without an emotional compass or a lived experience. So, can it truly develop an ethical framework akin to ours?

The Foundations of AI Ethics

The attempt to infuse AI with moral reasoning usually comes down to programming and data modeling. Researchers are working on developing ethical guidelines for AI behavior, but this often means encoding human ethics into algorithms. There’s a significant challenge here: ethics is not universal. Different cultures and societies have divergent beliefs about what is right or wrong, making it complicated to distill a single ethical framework for AI. Moreover, even within a specific culture, individual beliefs can vary dramatically.

AI today primarily relies on vast datasets to learn patterns and make decisions. If those datasets are not carefully curated, a system trained on them can perpetuate biases or reproduce harmful stereotypes present in the data. This calls into question how we determine what is ethical for a machine. If its understanding of morality is shaped by flawed data, can we trust its ethical reasoning?
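To make the data concern concrete, here is a minimal sketch in Python. It assumes a toy "model" that simply learns the most common past outcome for each group; the dataset, the group names, and the functions `train` and `predict` are all hypothetical and invented for illustration. Real systems are far more complex, but the mechanism is similar: a skew in the historical data becomes a skew in the predictions.

```python
from collections import Counter, defaultdict

# Hypothetical historical decisions as (group, outcome) pairs.
# The data is invented for illustration and deliberately skewed.
HISTORY = [
    ("group_a", "approve"), ("group_a", "approve"), ("group_a", "deny"),
    ("group_b", "deny"), ("group_b", "deny"), ("group_b", "approve"),
]


def train(records):
    """Learn the most frequent outcome per group (a majority-vote 'model')."""
    counts = defaultdict(Counter)
    for group, outcome in records:
        counts[group][outcome] += 1
    return {group: c.most_common(1)[0][0] for group, c in counts.items()}


def predict(model, group):
    """Return the outcome the model learned for this group."""
    return model[group]


model = train(HISTORY)
print(predict(model, "group_a"))  # the majority outcome seen for group_a
print(predict(model, "group_b"))  # the majority outcome seen for group_b
```

Nothing in this sketch knows whether the past decisions were fair. It reproduces whatever pattern it was given, which is why the quality of the data matters so much.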

Machine Learning and Moral Decision-Making

Some researchers posit that with advanced algorithms, AI could assist in moral decision-making. For example, autonomous vehicles must make split-second decisions in accident scenarios. Should the car swerve to hit one person instead of a group? These ethical dilemmas require a complex moral calculus, yet how can an AI system make such decisions without human-like empathy and understanding?

One proposed solution is the development of “machine ethics,” a field that aims to equip AI with moral reasoning capabilities. While machines can be programmed to adhere to certain ethical guidelines, the challenge is whether they can adapt or evolve their ethical reasoning as humans do. Humans learn from their experiences and adjust their moral compass over time, whereas AI learns only from the algorithms it is given and the data it is fed. This raises the question: Can machines genuinely understand the nuances of morality, or are they merely simulating ethical behavior based on pre-defined rules?
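The gap between following pre-defined rules and weighing outcomes can be illustrated with a small sketch based on the swerving-car dilemma. It assumes a drastically simplified scenario in which each option has a known harm count and a flag for whether the system must actively intervene. The `Option` type, both chooser functions, and the numbers are hypothetical; neither function is a recognized standard for machine ethics. They only show that two encodings of "ethical guidelines" can disagree on the same input.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    people_harmed: int           # assumed known in advance, which is unrealistic
    requires_intervention: bool  # True if the system must actively cause the harm


def utilitarian_choice(options):
    """Outcome-based: pick the option that harms the fewest people."""
    return min(options, key=lambda o: o.people_harmed)


def rule_based_choice(options):
    """Rule-based: never actively intervene to cause harm.

    A crude stand-in for a deontological-style constraint. Falls back to
    least harm if every available option requires intervention.
    """
    permitted = [o for o in options if not o.requires_intervention]
    return min(permitted or options, key=lambda o: o.people_harmed)


scenario = [
    Option("stay_course", people_harmed=3, requires_intervention=False),
    Option("swerve", people_harmed=1, requires_intervention=True),
]

print(utilitarian_choice(scenario).name)  # chooses by harm count alone
print(rule_based_choice(scenario).name)   # chooses among non-intervening options
```

Both functions apply a framework fixed by their author. Neither revises its rule in response to experience, which is the limitation described above.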

The Role of Intent and Consciousness

A significant aspect of human morality is intent. When we act, we consider our intentions and the potential impacts on others. AI lacks consciousness—it doesn’t possess desires, beliefs, or understanding. It operates from a mechanical standpoint, devoid of empathy and subjective experience. Therefore, even if we program AI systems with ethical rules, their lack of intent or understanding may render these frameworks superficial at best.

For instance, consider a healthcare AI making decisions about patient treatment. While it can analyze data and outcomes, it wouldn’t have the emotional awareness to grasp the gravity of life-and-death decisions in the way a human doctor might. This disconnect raises significant ethical concerns about delegating moral responsibility to machines.

The Future of AI Ethics

As we continue to integrate AI into every aspect of our lives, establishing ethical guidelines becomes crucial. While it’s tempting to develop ethics for machines that reflect societal norms, we must also confront the paradox of trying to imbue a non-sentient entity with the capacity to make moral decisions. Ethical AI development necessitates collaborative efforts among ethicists, technologists, policymakers, and the public to ensure that machines align with our moral expectations.

Ultimately, while we may create AI systems that mimic ethical frameworks, this does not equate to genuine moral reasoning. Machines may one day assist in ethical decision-making, but human oversight remains essential. As we venture into the realm of AI morality, we must tread carefully, recognizing that our understanding of ethics shapes our machines, just as our machines may come to shape our understanding of ethics.

Conclusion

The question of whether AI systems can develop ethical frameworks comparable to those of humans remains complex and multifaceted. For now, we can program AI with ethical guidelines, but the result pales in comparison to the intricacies of human moral reasoning. As we proceed, we must engage in continuous dialogue about the ethical implications of AI, ensuring that technology serves humanity in a morally responsible manner.

In essence, while artificial intelligence can make great strides, it is our role to guide it toward ethical standards that reflect our most profound values as a society. The journey toward understanding machine morality is just beginning, and it requires our collective wisdom and insight.
