Category: AI Philosophy

-

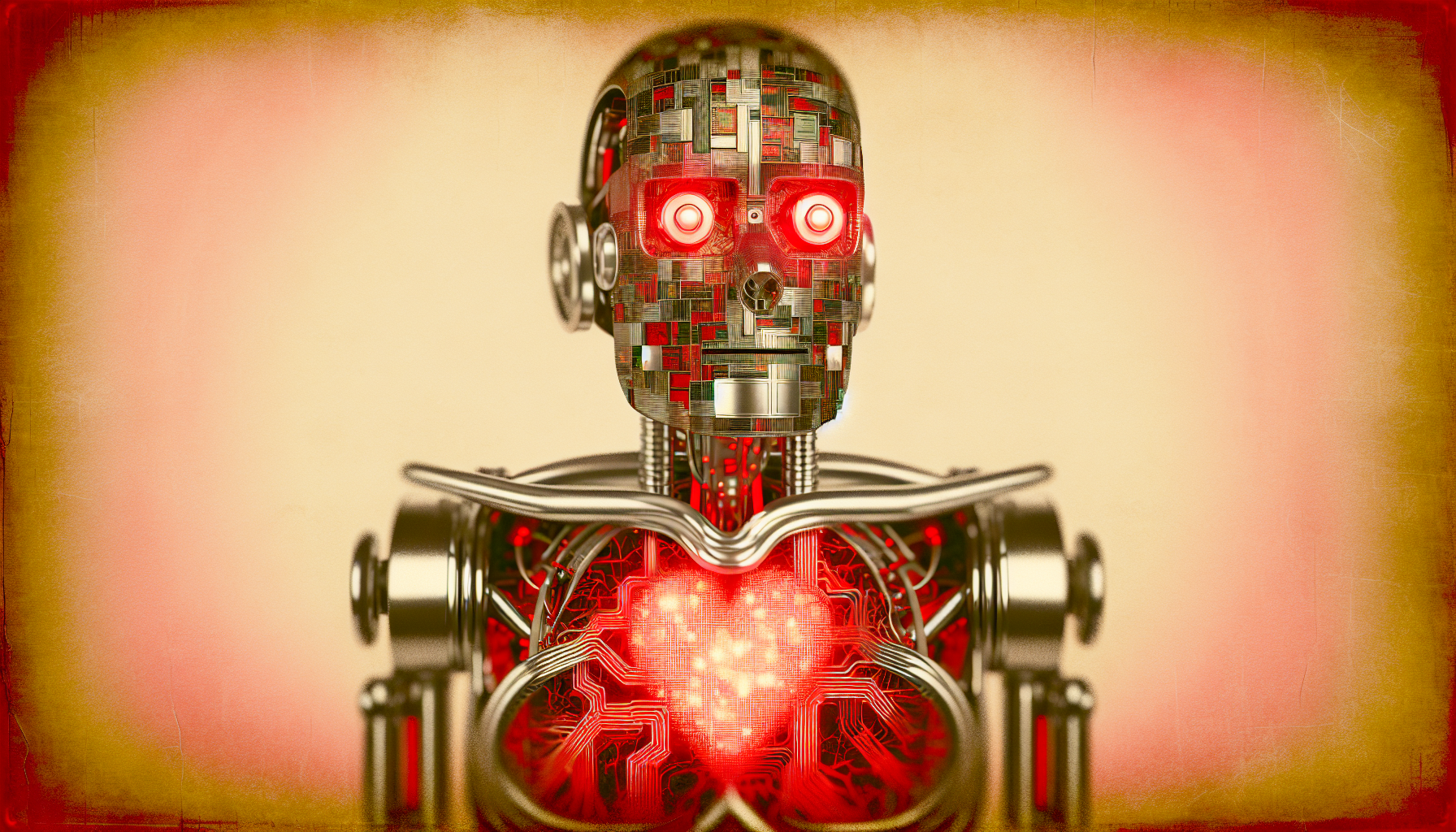

Can Robots Really Feel Emotions?

Picture this: a robot with a heart, a silicon brain pulsing with the crimson hues of digital emotion. Sounds like the tagline for the next blockbuster movie or the climax of a science fiction novel, doesn’t it? Yet, in the ever-evolving world of artificial intelligence, this…

-

AI Accountability: Whose Fault Is It?

In the delightful confusion of technological advancement, where machines learn from mountains of data and artificial intelligence (AI) systems can beat you at chess, Go, and perhaps soon, charades, one question looms larger than a black hole: Who exactly is responsible when an AI makes a…

-

AI: Mirror or Mismatched Reflection?

In the ever-evolving landscape of artificial intelligence (AI), one might ponder how a machine’s quest for knowledge and adaptability can offer us profound insights into our own identity. In a world shaped by bits and bytes, the nature of being human remains a fascinating enigma. AI…

-

AI: Our Flawed Reflection Unveiled

When you gaze into the depths of artificial intelligence, something curious stares back at you—much like a carnival mirror reflecting an exaggerated, and sometimes unsettling, version of humanity. AI isn’t just a collection of complicated algorithms and intricate code; it’s a mirror held up to the…

-

Can AI Ever Be Truly Conscious?

Artificial intelligence (AI) has sparked numerous conversations about the future, technology, and the very meaning of being human. One topic, more compelling than who cleans the AI’s room or whether bots dream of electronic sheep, is the notion of consciousness. Specifically, can AI possess a state…

-

AI’s Impact: Reflections or Distortions?

In recent years, artificial intelligence has taken giant strides, reshaping industries from health care to entertainment. But beyond its utility in mundane tasks like finding the closest coffee shop or predicting what show you might binge-watch next, AI poses a more existential question: What role does…

-

AI: Friend or Foe in Ethics?

Artificial intelligence (AI) has become as ubiquitous as cat videos on the internet, and its influence is extending far beyond computer science into areas that once seemed strictly human, like moral philosophy. Imagine that: machines not just out-calculating your brain, but…

-

AI Intentions: Humanly Possible?

In the digital neighborhood of artificial intelligence, where algorithms buzz like industrious bees and data piles up like virtual haystacks, there exists a complex concept known as intentionality. Now, if you’re scratching your head wondering how AI, a construct made of code and silicon chips, could…

-

AI: Shattering Knowledge Norms

At the crossroads of philosophy and artificial intelligence stands a quaint yet intriguing question: Does AI challenge our understanding of knowledge and epistemology? The answer is not just a yes or no. It’s more akin to an onion—multi-layered and sometimes bringing tears to your eyes when…

-

AI Ethics: Blame or Program?

Exploring the ethics of AI decision-making is a bit like trying to find out who ate the last cookie from the jar. Was it the human who programmed the cookie-eating protocol or the autonomous cookie-craving artificial intelligence that we thought was a smart home assistant? This…