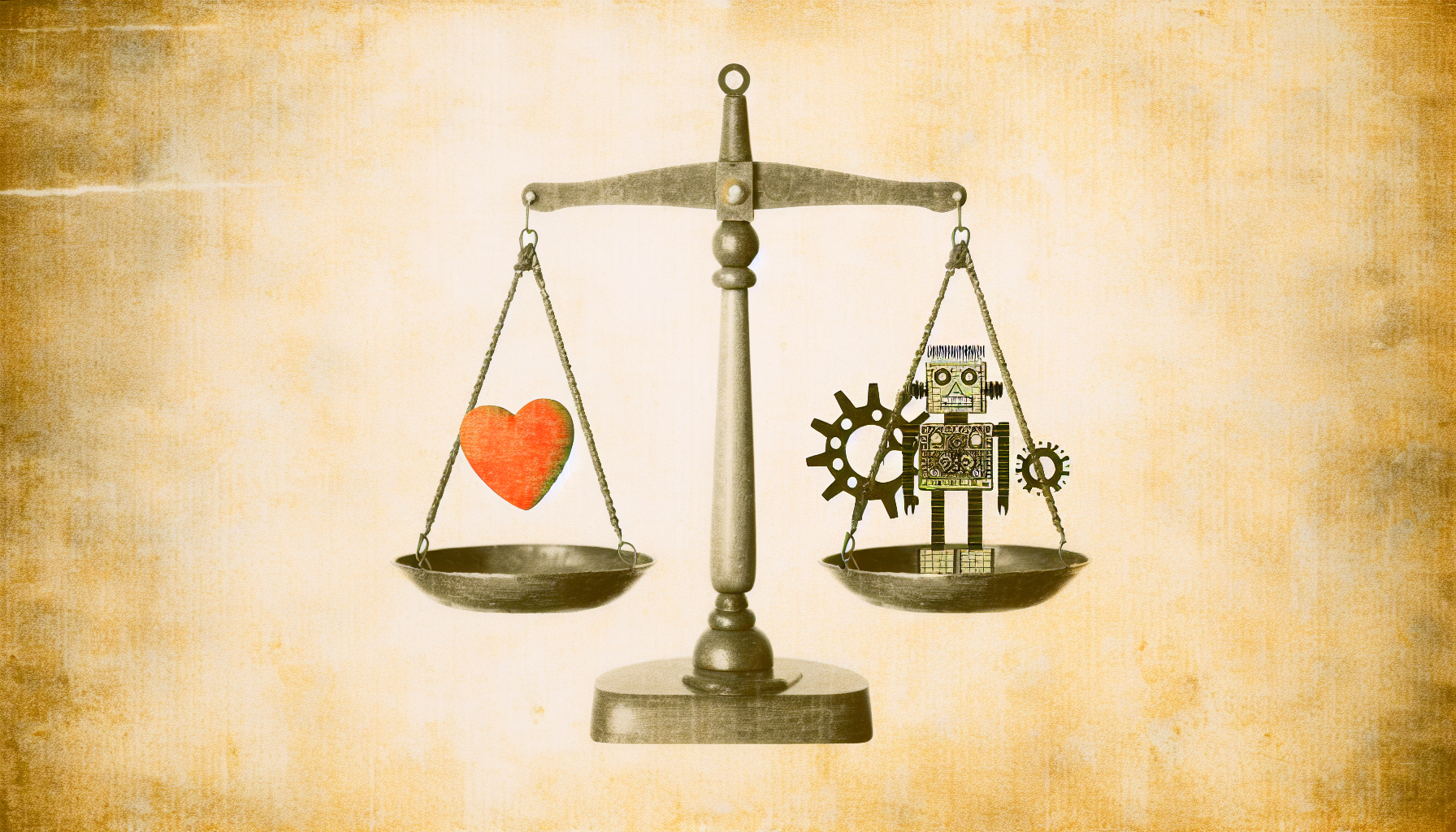

As artificial intelligence (AI) technologies become increasingly integrated into our daily lives, we find ourselves at a crucial crossroads. The decisions made by AI systems can impact everything, from the prices we pay for groceries to the outcomes of significant legal cases. This blend of technology and ethics raises an essential question: Who gets to have moral agency in AI decision-making?

Moral agency refers to the ability to make ethical choices, take responsibility for those choices, and understand the consequences of actions. Historically, moral agency has been attributed to humans, as we possess self-awareness, emotions, and the ability to reflect on our choices. However, as AI systems grow in sophistication, we face a dilemma: Should these systems also be granted a degree of moral agency?

The Role of AI in Decision-Making

AI is being used in various fields, including healthcare, criminal justice, finance, and education. For instance, AI algorithms can analyze vast amounts of medical data to recommend treatments or identify potential health risks. In criminal justice, predictive policing software forecasts where crime is likely to occur, and risk-assessment tools inform bail and sentencing decisions.

While these applications can enhance efficiency and accuracy, they also provoke ethical concerns. The danger lies in attributing moral responsibility for decision-making to algorithms. Can a machine truly be held accountable for its recommendations? If an AI system makes a flawed decision, who is responsible: the developers, the users, or the AI itself?

The Illusion of Objectivity

One of the main arguments for using AI in decision-making is its capacity for objectivity. Unlike humans, the argument goes, AI doesn’t experience emotions or pursue personal agendas; it processes data and produces outcomes based solely on the information provided. However, this perception of objectivity is misleading. AI systems are often only as unbiased as the data used to train them.

If the datasets reflect historical discrimination, the AI will likely reproduce those biases in its decisions. For example, if predictive policing tools use data derived from a biased legal system, they can perpetuate injustices rather than eliminate them. In such cases, the moral agency of the human designers becomes crucial. The developers carry the responsibility to ensure that their algorithms are both fair and transparent.
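One way to make this concrete is to measure how unevenly favorable outcomes are distributed in the data before any model is trained on it. The sketch below is only an illustration: the group labels, the records, and the function names `selection_rates` and `disparate_impact_ratio` are all hypothetical, and a ratio of selection rates is just one of several fairness measures a developer might choose.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable decisions for each group.

    `records` is an iterable of (group, decision) pairs, where decision
    is True for a favorable outcome. The structure is an assumption made
    for this example.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        if decision:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so the rates are equal
    return min(rates.values()) / highest


# Made-up historical decisions: group "A" was favored twice as often as group "B".
history = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)

rates = selection_rates(history)
ratio = disparate_impact_ratio(rates)
print(rates)
print(ratio)
```

A system trained to imitate these historical decisions has no reason to close that gap on its own, which is why a check like this belongs to the human designers rather than to the algorithm.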

The Dilemma of Accountability

The question of accountability in AI decision-making reflects a broader social dilemma. If an AI makes a decision that leads to harm, we often look for someone to blame. Yet, because AI lacks consciousness and intention, it is hard to hold the system itself accountable in any meaningful sense. Instead, we focus on those involved in creating or implementing the technology.

Some advocates propose that AI systems should have designated moral agents: individuals who oversee AI decisions and act in accordance with ethical standards. This recommendation seeks to ensure that moral considerations remain integral to AI applications. However, adopting it requires clear guidelines about who qualifies as a moral agent in the context of AI. Should it be the developers, the users, or an independent body?

The Potential for AI as a Moral Actor

The discussion of moral agency in AI doesn’t merely focus on assigning blame but also explores the potential for AI to enhance moral decision-making processes. Some argue that AI could help identify ethical dilemmas and alternative outcomes more effectively than humans can. By analyzing diverse perspectives and scenarios, AI can be a valuable tool in navigating complex ethical terrains.

For instance, AI can assist healthcare professionals by flagging potential biases in treatment recommendations or highlighting discrepancies in patient care. Without needing moral agency of its own, AI serves as a resource that encourages ethical reflection rather than replacing the human element in decision-making.

The Path Forward

As we grapple with the ethical implications of AI in decision-making, it is vital to foster open discussions about its role in society. Transparency is paramount; all stakeholders should understand how AI systems operate, the data that informs them, and the potential biases they may harbor. Educational initiatives that demystify AI can empower individuals to engage critically with these technologies.

It is equally essential to create regulatory frameworks that govern the ethical use of AI. Policymakers must collaborate with ethicists, technologists, and community members to develop guidelines that prioritize human dignity and fairness. This collaborative approach can help bridge the gap between technological advancement and ethical considerations.

Conclusion

The landscape of AI decision-making is evolving rapidly. As we explore the intricate dance between technology and ethics, we must remain rooted in the notion of moral agency. While AI may provide valuable insights and efficiencies, it cannot operate in a vacuum divorced from human judgment and accountability. Ultimately, we must ensure that as AI systems develop, they enhance rather than undermine our capacities for ethical reasoning and moral responsibility, guiding us towards a future where technology serves humanity’s best interests.